This article summarizes how Travis CI was plugged into the GPF-JS GitHub repository to assess quality on every push.

Quality control

Since the beginning of the GPF-JS project, quality has been a high priority.

Over the years, a complete development and testing framework has been built:

- Development follows TDD principles

- The code is linted with eslint (and also with jshint, even though it is redundant)

- The maintainability ratio is computed using plato and verified through custom threshold values

- Testing is done on all supported hosts

- The coverage level is measured and controlled with custom threshold values

- A web dashboard displays the metrics and gives access to the tools

However, these validations were done locally and they had to be run manually before pushing the code.

To enable collaboration and ensure that all pull requests go through the same validation process, a continuous integration platform was required.

Another approach consists of creating git hooks to force the quality assessment before pushing. However, it requires the contributor's machine to be properly configured. Having a central place where all the tests are run guarantees their correct and unbiased execution.

Travis CI

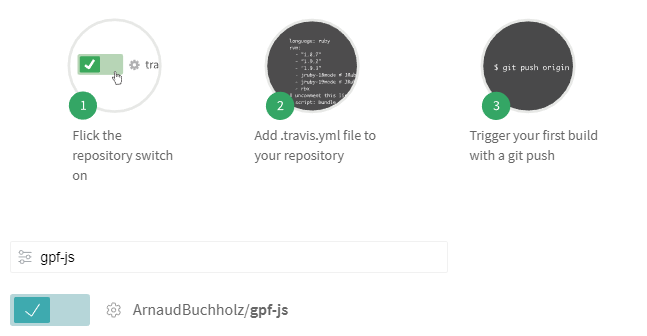

Travis CI is a free solution that integrates smoothly with GitHub. In fact, the GitHub credentials are used to log in to the platform.

After authentication, all the repositories are made available to Travis and each one can be enabled individually.

The selected repositories must be configured to instruct Travis on how the builds are done. To put it in a nutshell, a new file (.travis.yml) must be added.

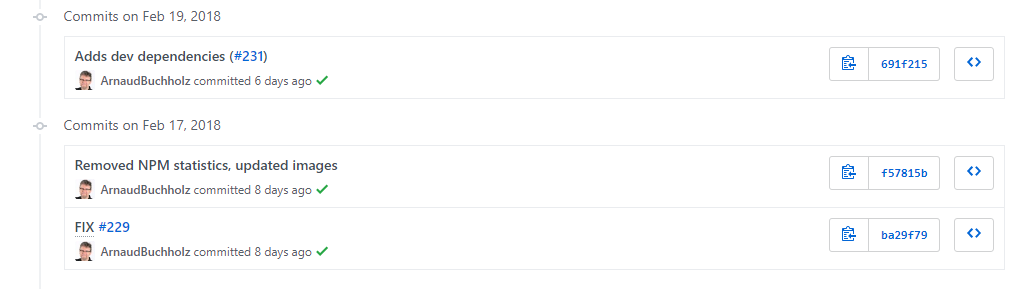

Once everything is put together, the platform monitors pushes and triggers builds automatically. Finally, it annotates the push in GitHub with a flag indicating whether the build succeeded.

When opening the Travis website, the user is welcomed with a nice dashboard listing the enabled repositories with their respective status.

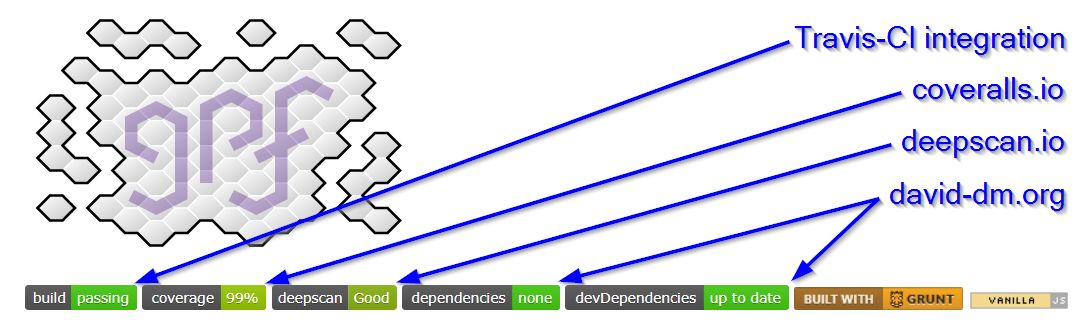

The project README can be modified to show the last build result using an image delivered by the Travis infrastructure.

Changes in GPF-JS

.travis.yml

The .yml extension denotes a YAML file: a structured format that is commonly used for configuration files. In the context of Travis, it describes the requirements and steps to build and validate the project.

Requirements

One obvious requirement of the GPF-JS development environment is NodeJS. This is configured by defining the language setting and selecting the Long Term Support version.

Chrome is also required to enable browser testing.

Grunt is installed using the proper command line.

The remaining requirements are described by the project dependencies and are installed through NPM.

Steps

The default step executed by Travis is npm test.

The resulting command line is specified in the package.json file; it executes grunt with the following tasks:

- connectIf to set up a local web server necessary for browser and gpf.http testing

- concurrent:source to execute all tests with the source version (see library testing tutorial)

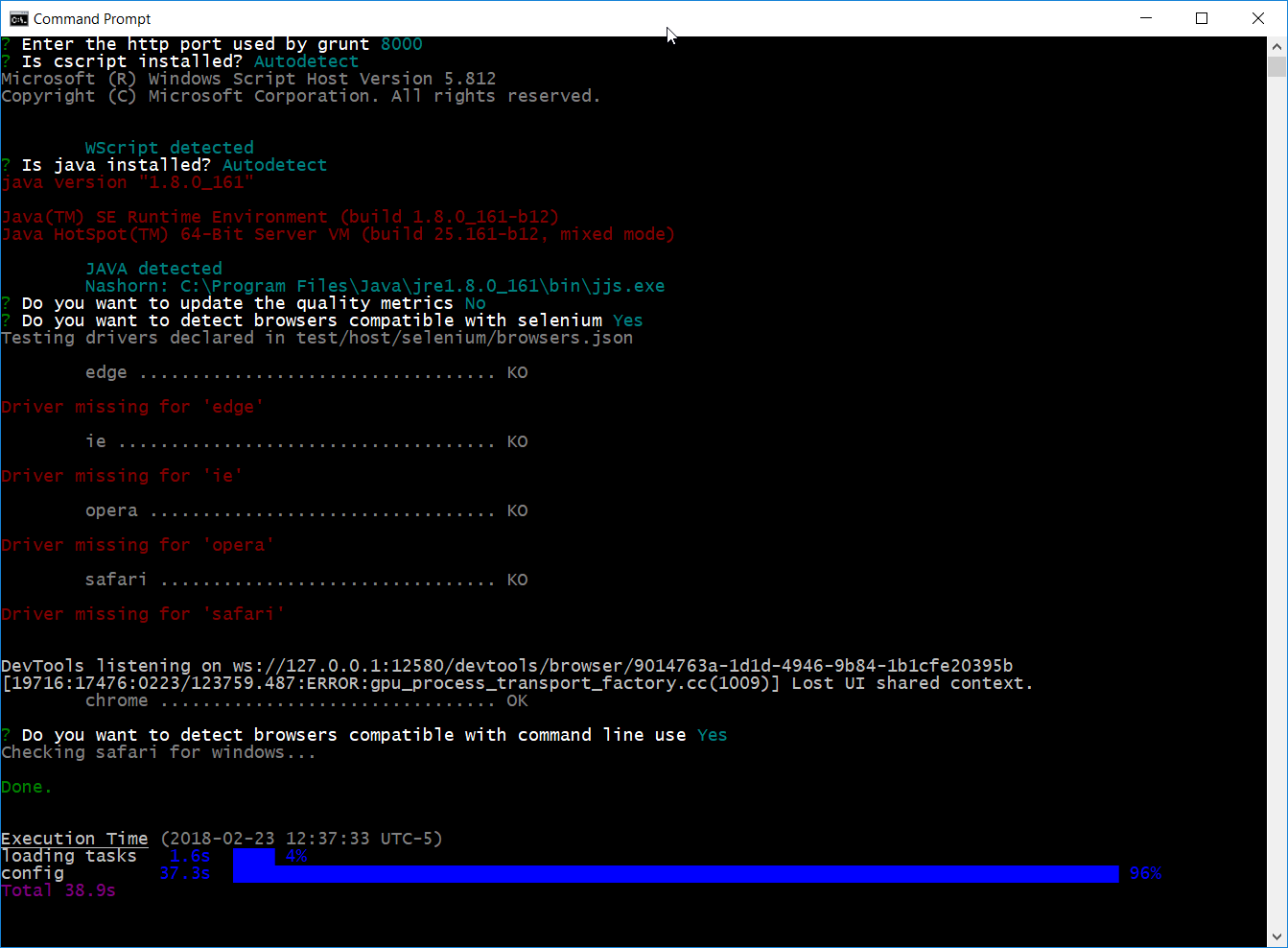

But, first, the GPF-JS development environment requires a configuration file to be generated: it contains the list of detected hosts, the metrics thresholds and the HTTP port to be used to serve files.

Configuration

The configuration file is created interactively the very first time the grunt command is executed. The user is expected to approve or change some values (such as the HTTP port).

However, on Travis, no user can answer the questions. Hence, the tool must be fully automatic.

When grunt is triggered, the gruntfile.js first checks whether a configuration file already exists. If not, a default task takes place to run the configuration tool.

To support Travis, this default task processing was isolated in a folder of 'first-launch' configuration tasks. The name of the file is used to name the task.
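The mechanism described above can be sketched as follows. This is only an illustration under assumptions: the folder names and the helper functions are hypothetical and are not taken from the GPF-JS repository; only the default and travis task names come from this article.

```javascript
"use strict";

// Hypothetical folder names: the real layout of the repository may differ.
const FIRST_LAUNCH_FOLDER = "grunt/first-launch";
const REGULAR_FOLDER = "grunt/tasks";

// Choose which task folder to load, depending on whether
// a configuration file has already been generated.
function selectTaskFolder(configExists) {
    return configExists ? REGULAR_FOLDER : FIRST_LAUNCH_FOLDER;
}

// Derive task names from file names: "travis.js" becomes the task "travis".
// Files that are not JavaScript sources are ignored.
function taskNamesFromFiles(fileNames) {
    const extension = ".js";
    return fileNames
        .filter(fileName => fileName.endsWith(extension))
        .map(fileName => fileName.slice(0, -extension.length));
}

console.log(selectTaskFolder(false));
console.log(taskNamesFromFiles(["default.js", "travis.js", "notes.txt"]));
```

In an actual gruntfile, each derived name could then be registered with grunt.registerTask, the task body being loaded from the matching file.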

The configuration tool was then modified to handle a so-called quiet mode and the Travis configuration task leverages this option. Additionally, the task also executes the quality tasks (linters, plato & coverage).
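To illustrate the idea of a quiet mode, here is a minimal sketch. The buildConfig function, the ask callback and the default port value are assumptions made for this example; the real configuration tool is more elaborate.

```javascript
// Build the configuration either interactively or silently.
// `ask` is a hypothetical callback that prompts the user for one value
// and returns the answer; it is never called in quiet mode.
function buildConfig(defaults, { quiet = false, ask } = {}) {
    const config = {};
    for (const key of Object.keys(defaults)) {
        config[key] = quiet
            ? defaults[key]            // quiet mode: accept every default value
            : ask(key, defaults[key]); // interactive mode: let the user decide
    }
    return config;
}

// On a CI platform nobody can answer, so the defaults are taken as-is.
// The port number below is only a placeholder.
const ciConfig = buildConfig({ httpPort: 8000 }, { quiet: true });
console.log(ciConfig.httpPort);
```

Keeping the prompt behind a callback means the same code path serves both the interactive first launch and the automated one.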

So, the command grunt travis is executed before the testing step to ensure the existence of the configuration file.

Challenges

After figuring out the different steps, a long debugging session, mostly composed of trial-and-error cycles, was required to figure out the specifics of the platform.

The history of pushes on the Travis integration issue illustrates all the attempts that were made.

Non-Windows environment

"If it's not tested, it doesn't work"

The very first issue was related to the environment itself. Since development was done on Windows, the library had never been tested on a different operating system.

Travis offers the possibility to use different flavors but the default one is Linux.

As a consequence, some scripts were adapted, for instance to handle the differences in the path separator.

NodeJS can absorb those differences. This is the reason why the library uses / as the path separator and translates it back to \ only when running on a Windows-specific host.
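A minimal sketch of this kind of translation is shown below. The function names are hypothetical and the code is not extracted from the library; it only illustrates keeping / internally and converting at the boundary with a Windows-specific host.

```javascript
// Internal paths always use "/" as the separator.
function toInternalPath(hostPath) {
    return hostPath.split("\\").join("/");
}

// Convert to "\" only when the target host is Windows-specific.
function toHostPath(internalPath, isWindowsHost) {
    return isWindowsHost
        ? internalPath.split("/").join("\\")
        : internalPath;
}

console.log(toInternalPath("src\\boot.js"));   // src/boot.js
console.log(toHostPath("src/boot.js", true));  // src\boot.js
console.log(toHostPath("src/boot.js", false)); // src/boot.js
```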

Browser testing

Another issue was related to browser testing. As a matter of fact, the Travis environment does not provide any graphical user interface by default, which means that you can't start a browser the usual way.

Fortunately, this is explained in the Travis documentation where several options are proposed.

And, luckily, the GPF-JS development environment provides an alternate way to test browsers.

Consequently, the headless mode of Chrome is used.
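As an illustration only, the arguments of a headless Chrome run could be assembled as below. The --headless, --disable-gpu and --remote-debugging-port switches are standard Chrome command line switches; the function name, the URL and the port number are assumptions, and this is not the script used by the GPF-JS environment.

```javascript
// Build the argument list for a headless Chrome run.
// The URL and the debugging port are placeholders for this example.
function headlessChromeArgs(url, debuggingPort = 9222) {
    return [
        "--headless",                               // no graphical user interface needed
        "--disable-gpu",                            // commonly added on CI machines
        `--remote-debugging-port=${debuggingPort}`, // lets a tool drive the browser
        url
    ];
}

console.log(headlessChromeArgs("http://localhost:8000/test.html").join(" "));
```

Such a list could then be passed to child_process.spawn together with the path to the Chrome binary.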

WScript

The operating system being Linux, Microsoft's Windows Script Host (WScript) is not available.

Unlike the path separator issue, this is an expected situation: the configuration file includes a flag that is set upon detection of this scripting host.

However, one unexpected effect is bound to the code coverage measurement: a big part of the library is dedicated to bringing older hosts to a common feature set and WScript was running most of it. Thus, the coverage minimum was not reached and the build was failing.
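The role of such detection flags can be sketched as follows. The shape of the object and the host names are hypothetical; the actual configuration file may be organized differently.

```javascript
// Keep only the hosts whose detection flag is set in the configuration.
function hostsToTest(detectedHosts) {
    return Object.keys(detectedHosts)
        .filter(hostName => detectedHosts[hostName] === true);
}

// On a Linux build machine, the WScript flag would simply be false.
const detectedOnLinux = { node: true, wscript: false };
console.log(hostsToTest(detectedOnLinux));
```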

This was temporarily addressed by disabling the coverage threshold errors.
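The following sketch shows one way a threshold check can be turned into a warning instead of an error. The function, the option name and the figures are assumptions chosen for illustration; they do not reproduce the actual GPF-JS coverage task.

```javascript
// Compare measured coverage with the thresholds and report the gaps.
// When failOnThreshold is false, gaps are still listed but do not fail the build.
function checkCoverage(measured, thresholds, { failOnThreshold = true } = {}) {
    const failures = Object.keys(thresholds)
        .filter(metric => measured[metric] < thresholds[metric])
        .map(metric => `${metric}: ${measured[metric]}% < ${thresholds[metric]}%`);
    return {
        failures,
        ok: failures.length === 0 || !failOnThreshold
    };
}

// Placeholder figures: part of the code is not exercised without WScript.
const measured = { statements: 80, functions: 95 };
const thresholds = { statements: 90, functions: 90 };
console.log(checkCoverage(measured, thresholds).ok);
console.log(checkCoverage(measured, thresholds, { failOnThreshold: false }).ok);
```

Keeping the list of failures even when they are ignored makes it easy to re-enable the errors once the missing coverage is restored.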

Also, thanks to the Wscript emulator project from Mischa Rodermond, a simple simulator implemented with node validates the WScript specifics.

There are still some uncovered parts, but they will be addressed in a future release.

Other goodies

After digging through GitHub projects, I also discovered and integrated the coveralls package which can be used in conjunction with Travis so that coverage statistics are uploaded on a dedicated board.

I also added a dependency checker as well as a code quality checker (which required some cleaning).

Everything is visible from the project readme.
