Release 0.2.8: Serialization attributes

Release content

A longer release

As explained in the last release notes, I am concentrating on a side project and the library evolved to support its development.

In the meantime, other projects (mockserver-server and node-ui5) were started since interesting challenges were submitted over the last month. In addition, more documentation was requested on the linting rules as well as on the evolution of the library statistics.

As a consequence, this release took more time than usual (around 4 months).

Asynchronous helpers

Interface wrappers

When the XML serialization was introduced, a generic wrapper was required to simplify the use of the IXmlContentHandler interface.

The new function gpf.interfaces.promisify builds a factory method that takes an object implementing the given interface. This method returns a wrapper exposing the interface methods but returning chainable promises.

To put it in a nutshell, it converts this code:

    const
        writer = new gpf.xml.Writer(),
        output = new gpf.stream.WritableString();

    gpf.stream.pipe(writer, output).then(() => {
        console.log(output.toString());
    });

    writer.startDocument()
        .then(() => writer.startElement("document"))
        .then(() => writer.startElement("a"))
        .then(() => writer.startElement("b"))
        .then(() => writer.endElement())
        .then(() => writer.endElement())
        .then(() => writer.startElement("c"))
        .then(() => writer.endElement())
        .then(() => writer.endElement())
        .then(() => writer.endDocument());

into this code:

    const
        writer = new gpf.xml.Writer(),
        output = new gpf.stream.WritableString(),
        IXmlContentHandler = gpf.interfaces.IXmlContentHandler,
        xmlContentHandler = gpf.interfaces.promisify(IXmlContentHandler),
        promisifiedWriter = xmlContentHandler(writer);

    gpf.stream.pipe(writer, output).then(() => {
        console.log(output.toString());
    });

    promisifiedWriter.startDocument()
        .startElement("document")
        .startElement("a")
        .startElement("b")
        .endElement()
        .endElement()
        .startElement("c")
        .endElement()
        .endElement()
        .endDocument();

When using this wrapper, it quickly appeared that something was missing. It sometimes happens that the chain is broken by a normal promise. The wrapper was modified to deal with it.

    promisifiedWriter.startDocument()
        .startElement("document")
        .startElement("a")
        .startElement("b")
        .then(() => anyMethodReturningAPromise())
        .endElement()
        .endElement()
        .startElement("c")
        .endElement()
        .endElement()
        .endDocument();
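
To illustrate the chaining behavior, here is a minimal sketch of how such a wrapper could be built in plain JavaScript. It is not the library's implementation: chainableSketch, the explicit list of method names and the recorder object are assumptions made for this example, whereas gpf.interfaces.promisify works from an interface definition.

```javascript
// Hypothetical sketch: NOT the implementation of gpf.interfaces.promisify.
// Builds a factory wrapping an object whose methods return promises.
function chainableSketch(methodNames) {
    return function wrap(target) {
        // Wraps a promise in a thenable that also exposes the target's methods
        function extend(promise) {
            const wrapper = {
                // A regular then() keeps the chain going with any promise
                then: (onFulfilled, onRejected) => extend(promise.then(onFulfilled, onRejected)),
                catch: (onRejected) => extend(promise.catch(onRejected))
            };
            methodNames.forEach((name) => {
                // Each method waits for the previous step before calling the target
                wrapper[name] = (...args) => extend(promise.then(() => target[name](...args)));
            });
            return wrapper;
        }
        return extend(Promise.resolve());
    };
}

// Usage with a fake asynchronous object (assumption for the example)
const calls = [];
const recorder = {
    startElement: (name) => Promise.resolve().then(() => calls.push("<" + name + ">")),
    endElement: () => Promise.resolve().then(() => calls.push("</>"))
};

const chain = chainableSketch(["startElement", "endElement"])(recorder)
    .startElement("a")
    .then(() => new Promise((resolve) => setTimeout(resolve, 10))) // normal promise in the chain
    .endElement();
```

Because every step returns a new wrapper around the previous promise, a plain then() call does not break the chain: the interface methods remain available on its result.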

The best example of use is the $metadata implementation of the side project.

gpf.forEachAsync

There are many solutions to handle loops with promises.

Since the library offers iteration helpers (gpf.forEach), it made sense to provide the equivalent for asynchronous callbacks: gpf.forEachAsync. It returns a promise that is resolved when the loop is over.
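
As an illustration of one of these solutions, the sketch below chains the callbacks with Array.prototype.reduce. It is not the code of gpf.forEachAsync: the name forEachAsyncSketch, the array-only support and the assumption that items are processed one after the other are mine.

```javascript
// Hypothetical sketch: NOT the implementation of gpf.forEachAsync.
// Assumption: items are processed sequentially, each callback may return a promise.
function forEachAsyncSketch(items, callback) {
    return items.reduce(
        (previous, item, index) => previous.then(() => callback(item, index, items)),
        Promise.resolve()
    ).then(() => undefined); // the loop itself resolves to no value
}

const processed = [];
const loopDone = forEachAsyncSketch(["a", "b", "c"], (item, index) =>
    new Promise((resolve) => setTimeout(resolve, 5)).then(() => {
        processed.push(index + ":" + item);
    })
);

loopDone.then(() => console.log(processed.join(", "))); // 0:a, 1:b, 2:c
```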

$singleton

Among the design patterns, the singleton is probably the easiest to describe and implement.

Here again, there are many ways to implement a singleton in JavaScript.

In the library, an entity definition may include the $singleton property. When used, any attempt to create a new instance of the entity will return the same instance.

The singleton is allocated the first time it is instantiated.

For instance:

    var counter = 0,
        Singleton = gpf.define({
            $class: "mySingleton",
            $singleton: true,
            constructor: function () {
                this.value = ++counter;
            }
        });

    var instance1 = new Singleton();
    var instance2 = new Singleton();

    assert(instance1.value === 1);
    assert(instance2.value === 1);
    assert(instance1 === instance2);
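
For comparison, here is one of the many ways to get the same behavior in plain JavaScript, without the library. This is only a sketch and not how gpf.define handles $singleton; singletonSketch and Counter are names invented for the example.

```javascript
// Hypothetical sketch: NOT how gpf.define implements $singleton.
function singletonSketch(Class) {
    let instance = null;
    // A constructor function that returns an object makes `new` yield that object
    return function Singleton(...args) {
        if (instance === null) {
            instance = new Class(...args); // allocated on first instantiation only
        }
        return instance;
    };
}

let created = 0;
class Counter {
    constructor() {
        this.value = ++created;
    }
}

const SingleCounter = singletonSketch(Counter);
const first = new SingleCounter();
const second = new SingleCounter();
console.log(first === second, second.value); // true 1
```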

Serialization and validation attributes

A good way to describe these features is to start with the use case. As explained before, this release was made to support the development of a side project. Simply put, it consists of a full-stack JavaScript application composed of:

- An OpenUI5 interface

- A NodeJS server exposing an ODATA service

There are many UI frameworks out there. I decided to go with OpenUI5 for two reasons: the user interface is fairly simple, and I want it to be responsive and look professional. Furthermore, it comes with OPA, which will allow (in this particular case) end-to-end test automation.

Since I am a lazy developer building a backend on top of express, flexibility is mandatory so that adding a new entity / property does not imply changes all across the project.

Indeed, a new property means that:

- The schema must be updated so that the UI is aware of it

- Serialization (reading from / writing to client) must be adapted to handle the new property

- Depending on the property type, the value might be converted (in particular for date/time)

- It may (or may not) support filtering / sorting

- ...

gpf.attributes.Serializable

In this project, the main entity is a Record.

Since a class is defined to handle the instances, it makes sense to rely on its definition to determine what is exposed. However, we might need a bit of control over which members are exposed and how.

This is a perfect use case for attributes.

The gpf.attributes.Serializable attribute describes the name and type as well as indicates if the property is required.

For instance, the _name property is exposed as the string field named "name".

The required part is not yet leveraged but it will be used to validate the entities.

This definition is documented in the structure gpf.typedef.serializableProperty.

Today, only three types are supported:

gpf.serial

Helpers were created to make use of the members flagged with the Serializable attribute.

gpf.serial.get returns a dictionary indexing the Serializable attributes by class member name.

Also, two methods convert/read the instance into/from a simpler object containing only the serializable properties:

These methods include a converter callback to enable value conversion.

For instance:

    var raw = gpf.serial.toRaw(entity, (value, property) => {
      if (gpf.serial.types.datetime === property.type) {
        if (value) {
          return '/Date(' + value.getTime() + ')/'
        } else {
          return null
        }
      }
      if (property.name === 'tags') {
        return value.join(' ')
      }
      return value
    })
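
To make the mechanism more concrete, the sketch below shows what such a conversion could look like in plain JavaScript, given a dictionary of property descriptors indexed by member name. It is not the code of gpf.serial: the function names toRawSketch and fromRawSketch, the descriptor values and the converter signature are assumptions made for this example.

```javascript
// Hypothetical sketch: NOT the implementation of gpf.serial.
// Descriptors indexed by class member name (name, type, required)
const members = {
    _name: { name: "name", type: "string", required: true },
    _created: { name: "created", type: "datetime", required: false }
};

// Builds a plain object containing only the serializable properties
function toRawSketch(instance, descriptors, converter) {
    const raw = {};
    Object.keys(descriptors).forEach((member) => {
        const property = descriptors[member];
        const value = instance[member];
        raw[property.name] = converter ? converter(value, property, member) : value;
    });
    return raw;
}

// Reads the serializable properties back from a plain object
function fromRawSketch(instance, descriptors, raw, converter) {
    Object.keys(descriptors).forEach((member) => {
        const property = descriptors[member];
        if (Object.prototype.hasOwnProperty.call(raw, property.name)) {
            const value = raw[property.name];
            instance[member] = converter ? converter(value, property, member) : value;
        }
    });
    return instance;
}

const record = { _name: "test", _created: new Date(0), _internal: "not exposed" };
// Dates are converted to timestamps on the way out...
const rawRecord = toRawSketch(record, members, (value, property) =>
    property.type === "datetime" && value ? value.getTime() : value
);
// ...and back to Date objects on the way in
const copy = fromRawSketch({}, members, rawRecord, (value, property) =>
    property.type === "datetime" && value !== null ? new Date(value) : value
);
```

Members without a descriptor (here _internal) never reach the raw object, which is the point of driving the serialization from the class definition.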

attributes restrictions

If you carefully read the documentation of the gpf.attributes.Serializable attribute, you may notice the section named Usage restriction.

It mentions:

If you check the code (excerpt):

    var _gpfAttributesSerializable = _gpfDefine({
        $class: "gpf.attributes.Serializable",
        $extend: _gpfAttribute,
        $attributes: [
            new _gpfAttributesMemberAttribute(),
            new _gpfAttributesUniqueAttribute()
        ],

This means that the Serializable attribute can be used only on class members and only once (per class member).

This also means that new attribute classes were designed to secure the use of attributes. This will facilitate the adoption of the mechanism since any misuse of an attribute will generate an error. It is a better approach than having no effect and not letting the developer know.
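
The idea of failing loudly on misuse can be sketched in a few lines of plain JavaScript. This is not the library's implementation: AttributeRegistrySketch, SerializableSketch, the memberOnly and unique flags and the "$class" target name are all assumptions made for this example.

```javascript
// Hypothetical sketch: NOT the library's attribute implementation.
class SerializableSketch {}
// Assumption: restrictions are expressed as plain flags on the attribute class
SerializableSketch.memberOnly = true;
SerializableSketch.unique = true;

class AttributeRegistrySketch {
    constructor() {
        this._attributes = new Map(); // member name (or "$class") -> attributes
    }

    add(target, attribute) {
        const AttributeClass = attribute.constructor;
        const existing = this._attributes.get(target) || [];
        if (AttributeClass.memberOnly && target === "$class") {
            throw new Error(AttributeClass.name + " can only be used on class members");
        }
        if (AttributeClass.unique && existing.some((item) => item instanceof AttributeClass)) {
            throw new Error(AttributeClass.name + " can only be used once on " + target);
        }
        this._attributes.set(target, existing.concat(attribute));
    }
}

const registry = new AttributeRegistrySketch();
registry.add("_name", new SerializableSketch()); // accepted
let misuse = null;
try {
    registry.add("_name", new SerializableSketch()); // second use on the same member
} catch (e) {
    misuse = e.message;
}
console.log(misuse); // SerializableSketch can only be used once on _name
```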

The validation attributes are:

Actually, ClassAttribute, MemberAttribute and UniqueAttribute are singletons.

Obviously, these attributes are also validated: check their documentation and implementation.

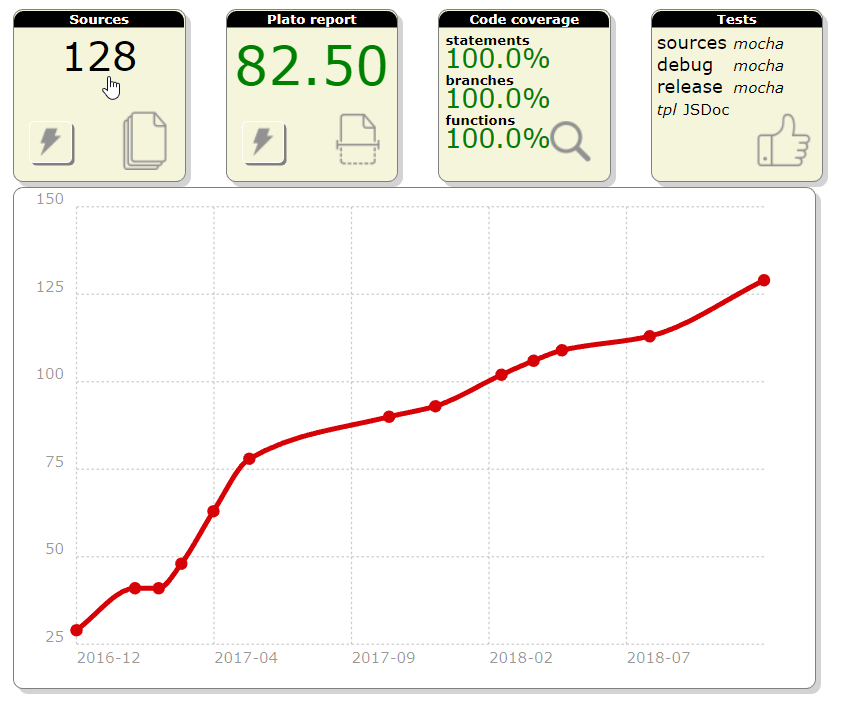

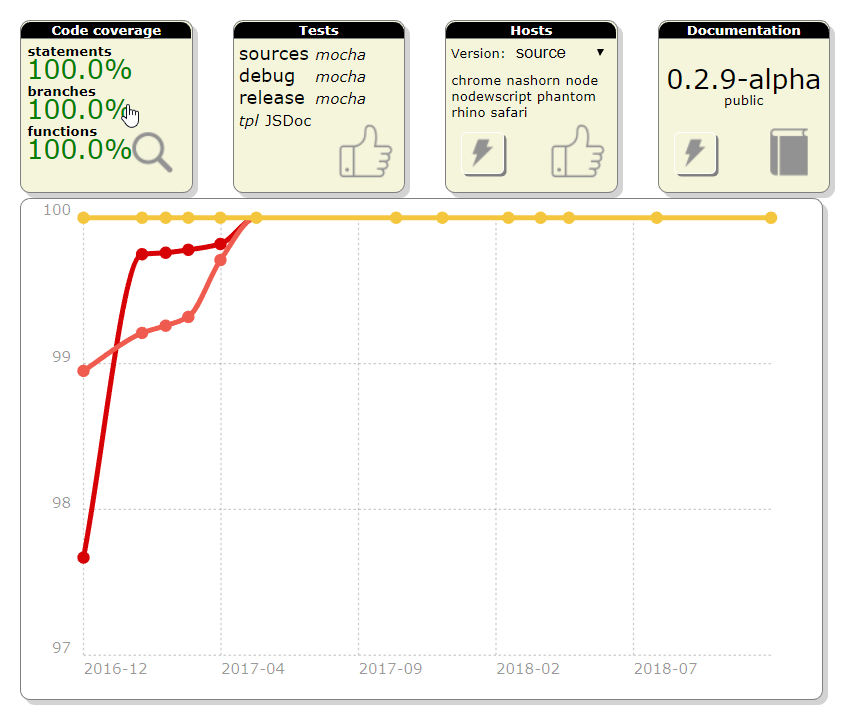

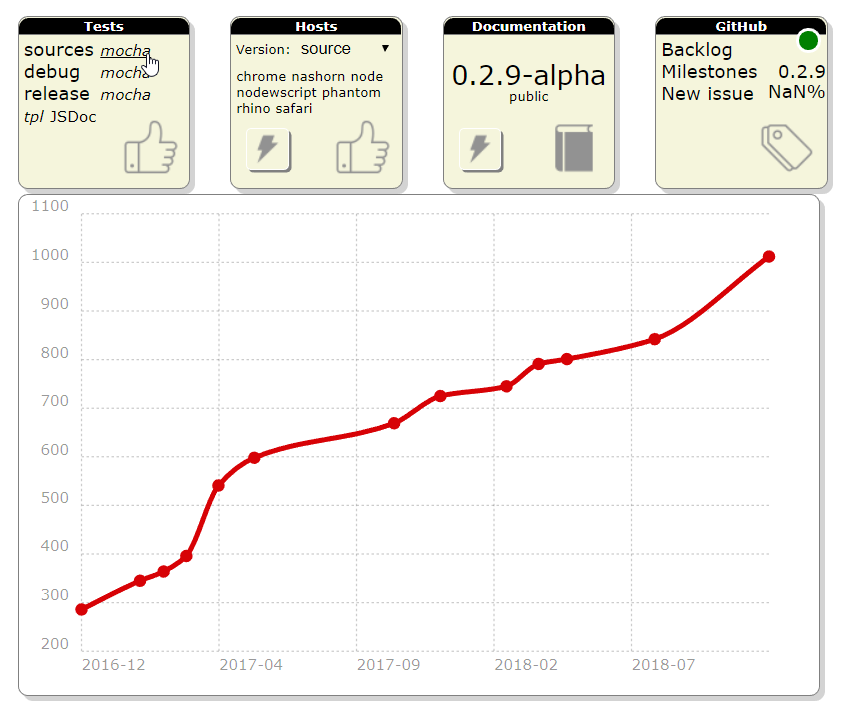

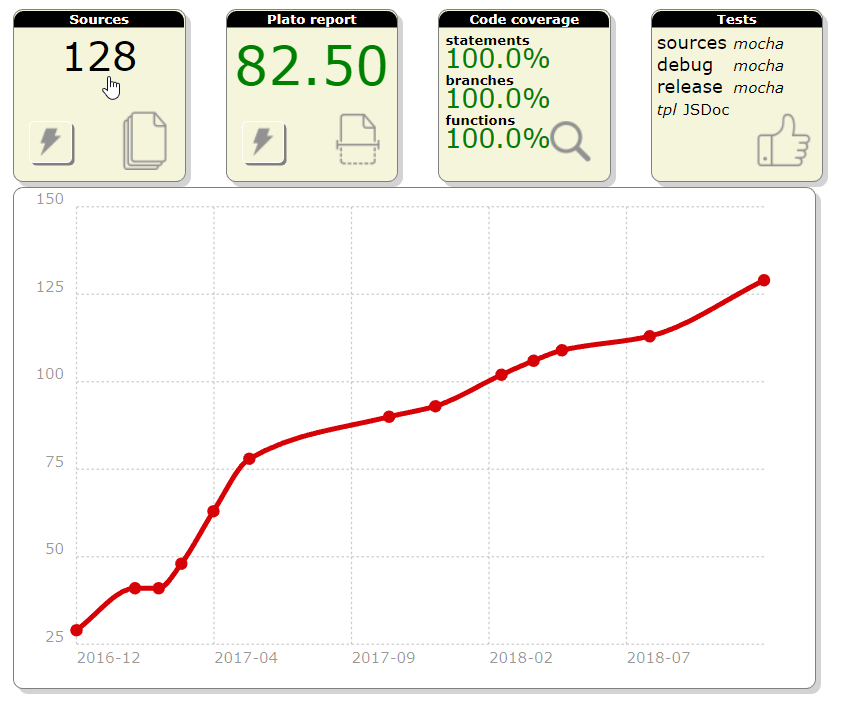

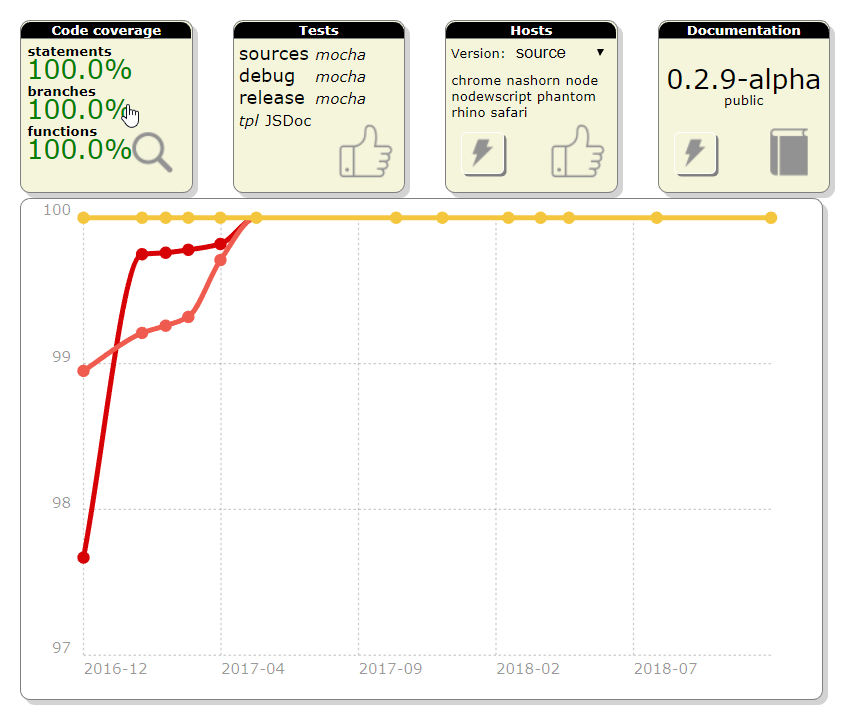

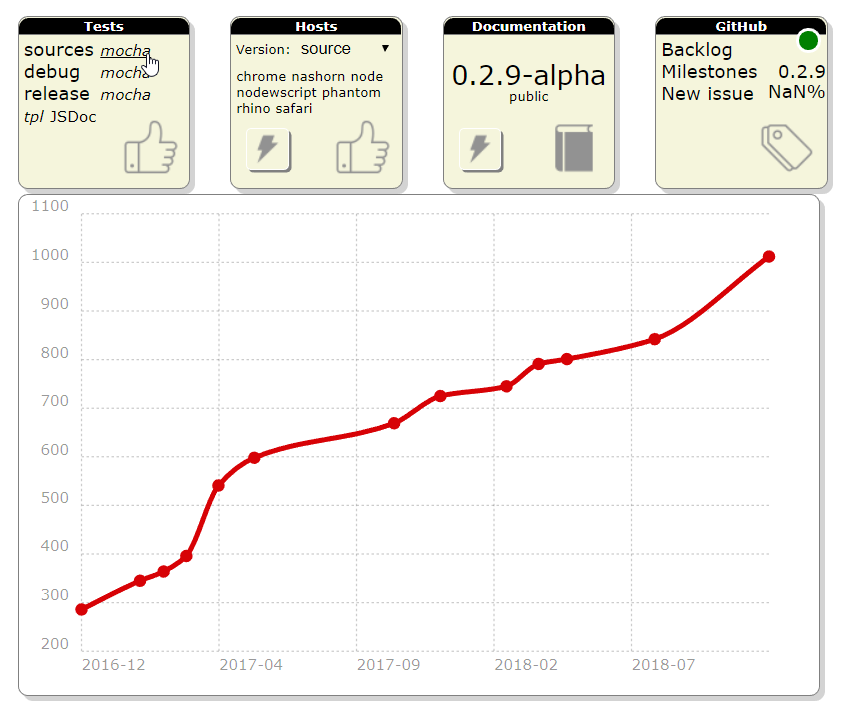

Project metrics reporting

Two years ago, the release 0.1.5 named "The new core" marked a new start for the development of the library. There are few traces of what happened before as the project was not structured. Since then, the project metrics have been systematically added to the Readme.

With release 0.2.3, all these metrics were consolidated into one single file: releases.json. This file is automatically updated by the release script.

Using chartist.js, the dashboard tiles were modified to render a chart showing the progression of the metrics over the releases.
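
As a rough illustration, the sketch below turns a list of releases into the { labels, series } object that Chartist charts take as data. The structure of releases.json is not shown in this post, so the shape used here (version and metrics fields) and the values are placeholders invented for the example.

```javascript
// Hypothetical shape and placeholder values: NOT the real content of releases.json
const releasesSketch = [
    { version: "r1", metrics: { tests: 10 } },
    { version: "r2", metrics: { tests: 20 } },
    { version: "r3", metrics: { tests: 30 } }
];

// Builds one series per metric, with one point per release
function toChartData(releases, metricNames) {
    return {
        labels: releases.map((release) => release.version),
        series: metricNames.map((metric) =>
            releases.map((release) => release.metrics[metric])
        )
    };
}

const chartData = toChartData(releasesSketch, ["tests"]);
// With Chartist loaded in the page, the data could then be rendered with:
// new Chartist.Line(".ct-chart", chartData);
```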

sources

plato

coverage

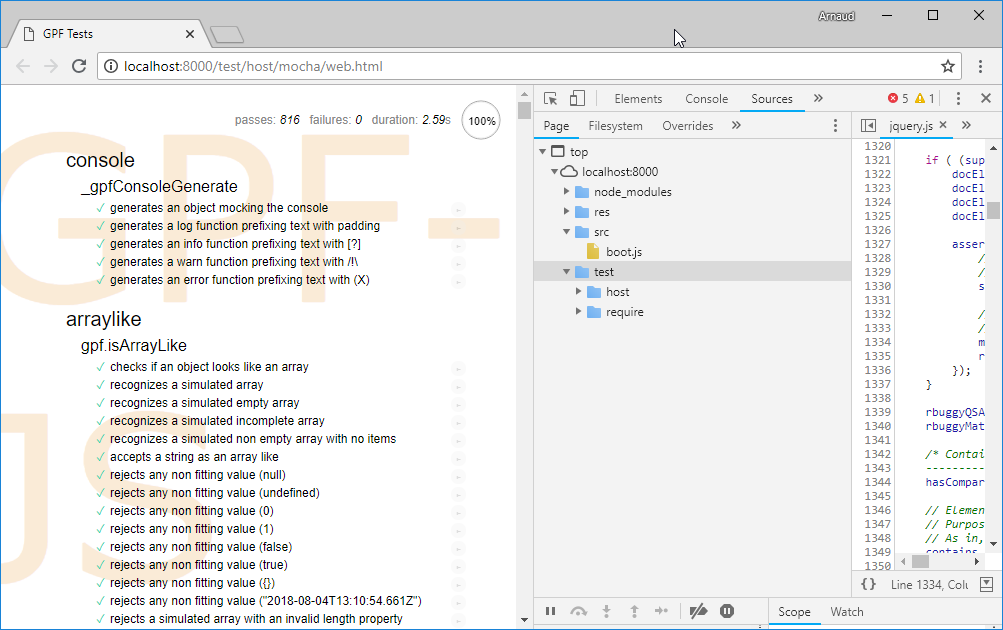

tests

Documentation of ESLint rules

Automated documentation

Linting has been used to statically validate the source code since the beginning of the project. The set of ESLint rules has been refined over the releases, and critical settings shaped the way the sources look.

Furthermore, the linter also evolves with time (and feedback) and some rules become obsolete as new ones are introduced.

In the end, it is really challenging to stay up-to-date and provide clear and complete explanations on the different rules that are configured (and why they are configured this way).

These are the problems that were addressed with the task #280.

As a result, a script leverages eslint's rules documentation to extract and validate the library settings. When needed, some details are provided.

The final result appears in the documentation in the Tutorials\Linting menu.

no-magic-numbers

While documenting the rules, the no-magic-numbers one stood out.

I wanted to understand how this rule would (could?) improve the code. It was enabled to see how many magic numbers existed. Because this generated a huge number of errors, the check was turned off for test files (to start with).

Some people like to distinguish warnings and errors. However, warnings do not call for action. As a result, they tend to last forever, leading to the broken window effect. I prefer a binary approach, meaning it is either OK or not OK.
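
In ESLint terms, this setup could look like the configuration sketch below: the rule is reported as an error for the sources and switched off for the test files. The test folder pattern is an assumption and this is not the library's actual configuration.

```javascript
// Sketch of an .eslintrc.js content; the "test/**/*.js" pattern is an assumption
const eslintConfigSketch = {
    rules: {
        // Reported as an error rather than a warning (binary approach)
        "no-magic-numbers": "error"
    },
    overrides: [{
        // Test files are excluded from the check (to start with)
        files: ["test/**/*.js"],
        rules: {
            "no-magic-numbers": "off"
        }
    }]
};
// In a real .eslintrc.js file: module.exports = eslintConfigSketch;
```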

It took almost one month of refactoring to remove them but, in the end, it did improve the code and lessons were learned.

This also demonstrated the value of having 100% of test coverage.

Lessons learned

Library + application

This may sound obvious but using the library as a support for an application gives immediate feedback on how appropriate the API is. It helps to keep the focus on how practical the methods are.

For instance, the helper gpf.serial.get was integrated in the library because its 10 little lines of code were repeated in the application.

Refactoring

It is not the first time that the whole library requires refactoring. And I actually like the exercise because it gives the opportunity to come back to old code that hasn't been touched in a while. Since the project started several years ago, my knowledge and skills have evolved, which gives a fresh look at the sources. Furthermore, the code being fully tested, there is very little risk.

When dealing with magic numbers, I realized that some patterns were obsolete because of JavaScript methods I was not used to. As the library offers a compatibility layer, it has been enriched with these new methods and the code was modified accordingly.

For instance, if (string.indexOf(otherString) === 0) is better replaced with if (string.startsWith(otherString)).

In the same way, if (string.indexOf(otherString) !== -1) is better replaced with if (string.includes(otherString)).

Last example, regular expressions are widely used with capturing groups. Their value is available in the array-like result through indexes. Using constants rather than numbers to get these values improves the code readability.
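
A small example of this last point, using a version string: the pattern and the constant names are made up for the illustration and do not come from the library sources.

```javascript
const VERSION_PATTERN = /^(\d+)\.(\d+)\.(\d+)$/;
// Indexes of the capturing groups in the match result
const VERSION_MAJOR = 1;
const VERSION_MINOR = 2;
const VERSION_PATCH = 3;

function parseVersion(version) {
    const match = VERSION_PATTERN.exec(version);
    if (match === null) {
        return null; // not a x.y.z version
    }
    return {
        major: Number(match[VERSION_MAJOR]),
        minor: Number(match[VERSION_MINOR]),
        patch: Number(match[VERSION_PATCH])
    };
}

console.log(parseVersion("0.2.8")); // { major: 0, minor: 2, patch: 8 }
```

Compared with match[1], match[2] and match[3], the named indexes document what each group captures and pass the no-magic-numbers check.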

Next release

The next release content is not completely defined. There are plans to expand the use of attributes to ES6 classes and to integrate graaljs.

For the rest, it will depend on the side project since it needs all my attention.

This release is required for the first application based on the library.

