This new release delivers a clean foundation to build the library in a proper (and faster?) way.

In this article, I will detail all the tooling that was built and what the road map is for the next releases.

It's finally out!

Almost two years ago, I was experimenting with NPM publication and version 0.1.4 went out.

At that time, I had no clear road map or even a vision of what I wanted to do with the GPF-JS library. Long story short, I was trying to consolidate my JavaScript know-how in order to re-create (in a better way) a library that I started in a previous company.

If you check the package.json history, version 0.1.5 'officially' started on November 26th, 2015. This was after I added some grunt packages to automate linting (jshint and ESLint) as well as testing (mocha and istanbul).

Clearly, the goal shifted from coding to automating, testing and checking quality. And that probably explains why I needed a full year to achieve this version.

What's inside?

Well... that's embarrassing but... almost nothing. Indeed, if you check the documentation, only a few functions and one class are available for now.

At least, the library provides a compatibility layer for all supported environments.

But, still, you might wonder: what did I spend my year on?

To put it in a nutshell, I focused more on the how than on the what.

From Grunt command line to Web interface

Grunt has been set up to automate lots of tasks.

When I run grunt --help, the following tasks are listed:

concurrent Run grunt tasks concurrently *

connect Start a connect web server. *

copy Copy files. *

jshint Validate files with JSHint. *

uglify Minify files with UglifyJS. *

watch Run predefined tasks whenever watched files change.

eslint Validate files with ESLint *

exec Execute shell commands. *

htmllint HTML5 linter and validator. *

instrument instruments a file or a directory tree

reloadTasks override instrumented tasks

storeCoverage store coverage from global

makeReport make coverage report

coverage check coverage thresholds *

jsdoc Generates source documentation using jsdoc *

mocha Run Mocha unit tests in a headless PhantomJS instance. *

mochaTest Run node unit tests with Mocha *

notify Show an arbitrary notification whenever you need. *

notify_hooks Config the automatic notification hooks.

chrome Alias for "connectIf", "exec:testChromeVerbose" tasks.

firefox Alias for "connectIf", "exec:testFirefoxVerbose" tasks.

ie Alias for "connectIf", "exec:testIeVerbose" tasks.

check Alias for "exec:globals", "concurrent:linters", "concurrent:quality", "exec:metrics" tasks.

connectIf Run connect if not detected

default Alias for "serve" task.

fixInstrument Custom task.

istanbul Alias for "instrument", "fixInstrument", "copy:sourcesJson", "mochaTest:coverage", "storeCoverage", "makeReport", "coverage" tasks.

make Alias for "exec:version", "check", "jsdoc:public", "connectIf", "concurrent:source", "exec:buildDebug", "exec:buildRelease", "uglify:buildRelease", "exec:fixUglify", "concurrent:debug", "concurrent:release", "uglify:buildTests", "copy:publishVersionPlato", "copy:publishVersion", "copy:publishVersionDoc", "copy:publishTest" tasks.

plato Alias for "copy:getPlatoHistory", "exec:plato" tasks.

node Custom task.

phantom Custom task.

rhino Custom task.

wscript Custom task.

pre-serve Custom task.

serve Alias for "pre-serve", "connect:server", "watch" tasks.

The exec task also has 27 sub configurations...

As I am too lazy to remember (or even type) all the grunt tasks, I decided to create a small web interface that would offer me all the commands I need in one click.

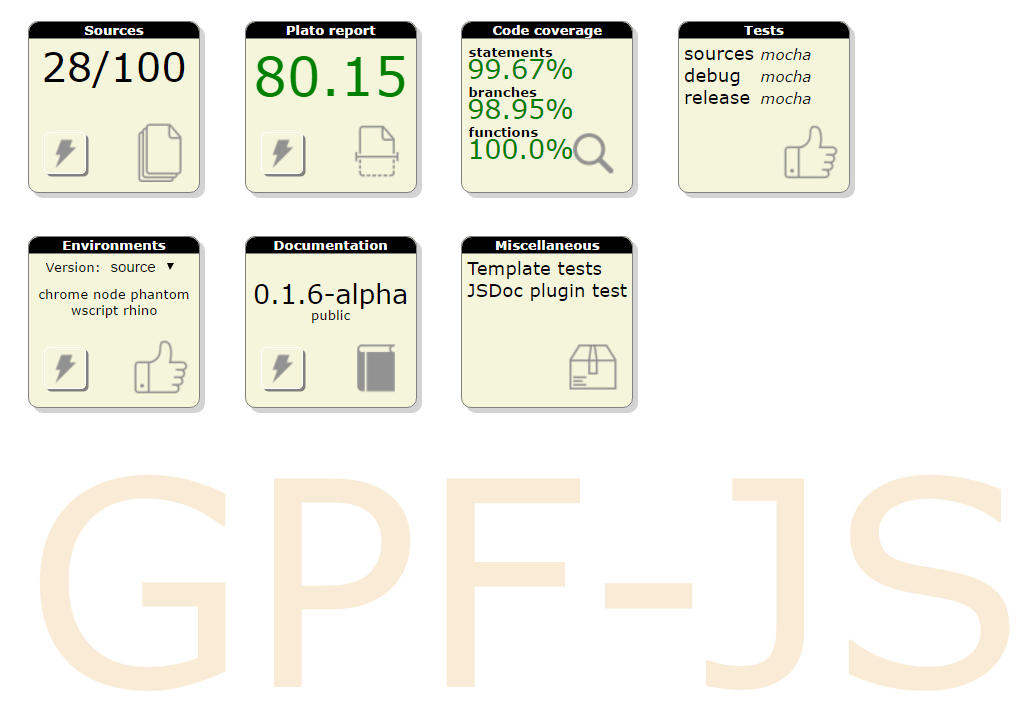

When you install the project and run grunt (see readme), a browser will pop to display this page:

It will be empty at first but this will be improved.

The magic happens when you click the buttons or links. They are simple hyperlinks to URLs like:

http://localhost:8000/grunt/make

This one triggers the grunt task named make.

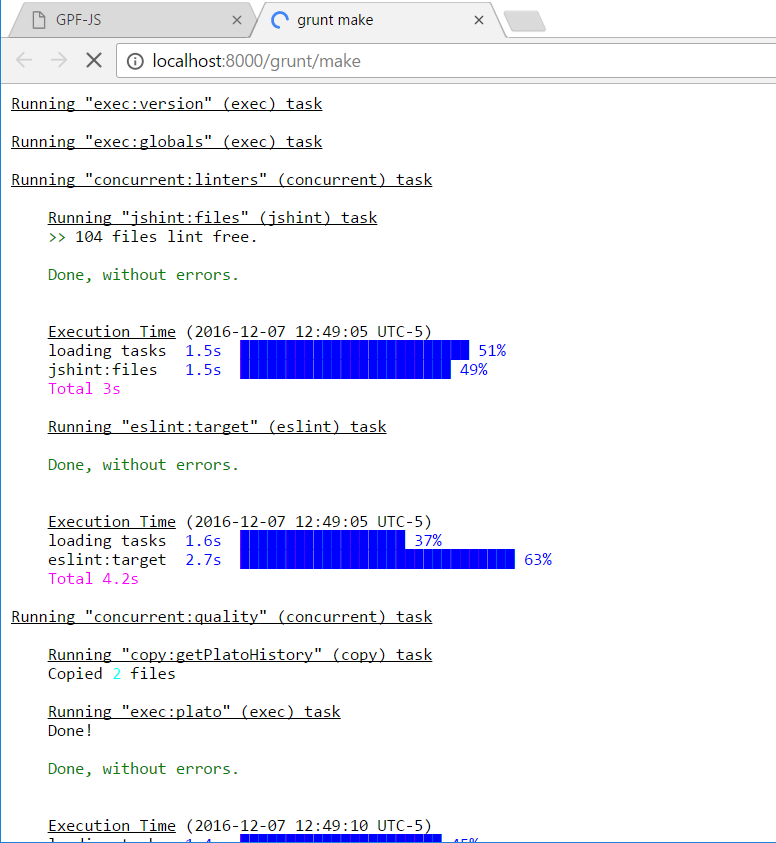

While being executed in the background, any output generated by the task is parsed for formatting and sent back to the browser. As a result, you can trace the task execution in real time:

From an implementation point of view, I added a middleware to the connect task.

This is not the code I am most proud of... but it works. I am planning to improve this code as soon as the library offers decent parsing helpers.
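For illustration only (this is not the project's code), such a middleware could be sketched as follows. It assumes a connect-style signature (request, response, next), the /grunt/ URL prefix shown above, and a grunt command reachable from the PATH. The names getGruntTask and gruntMiddleware are hypothetical, and the formatting of the output is left out.

```javascript
"use strict";

const { spawn } = require("child_process");

// URL prefix taken from the example URL above
const GRUNT_PREFIX = "/grunt/";

// Extracts the task name from a URL such as /grunt/make, or returns null
function getGruntTask(url) {
    if (typeof url !== "string" || !url.startsWith(GRUNT_PREFIX)) {
        return null;
    }
    const task = url.slice(GRUNT_PREFIX.length).split("?")[0];
    // Only accept names that look like grunt tasks (for instance exec:version)
    return /^[\w:-]+$/.test(task) ? task : null;
}

// Connect-style middleware (hypothetical name)
function gruntMiddleware(request, response, next) {
    const task = getGruntTask(request.url);
    if (!task) {
        return next(); // Not a grunt URL: let the next middleware handle it
    }
    let finished = false;
    const finish = (message) => {
        if (!finished) {
            finished = true;
            response.end(message);
        }
    };
    response.setHeader("Content-Type", "text/plain; charset=utf-8");
    // Assumption: grunt is reachable from the PATH (on Windows, it is grunt.cmd)
    const child = spawn("grunt", [task]);
    // Forward the raw output as soon as it is produced
    child.stdout.on("data", (chunk) => response.write(chunk));
    child.stderr.on("data", (chunk) => response.write(chunk));
    child.on("error", (error) => finish("Unable to run grunt: " + error.message));
    child.on("close", (code) => finish("\nExit code: " + code));
}
```

Writing each chunk to the response as it arrives is what makes the execution traceable in real time: the browser renders the page progressively while the task is still running.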

Source management

I briefly explained my issues with source management and the reason why I needed a template mechanism. After implementing my own template engine, I created a page that allows me to quickly enable / disable sources and reorganize them (using drag & drop).

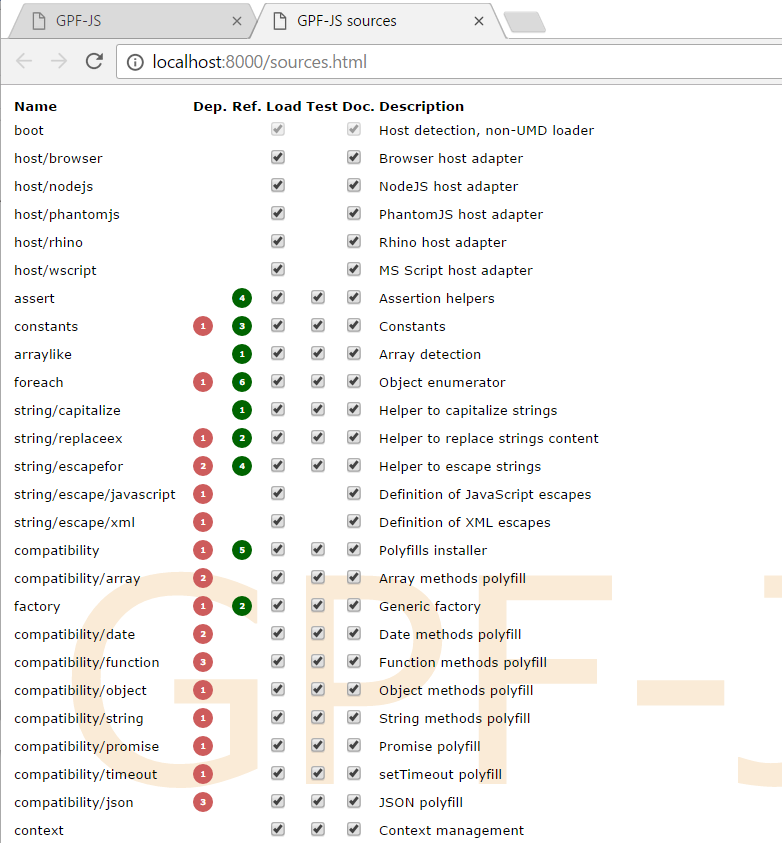

The tile titled "Sources" shows the number of active sources compared to the total number of sources. If you click it, you access the list.

In front of each source, you have access to:

- Dependencies analysis: the red bubble shows the count of sources the current one depends on and the green bubble shows the count of sources depending on this one. Each bubble details the dependencies inside a tooltip

- Load checkbox: the source will be part of the library when ticked

- Test checkbox: it appears only if a matching test file exists and it controls whether this test file is included in the test suite

- Doc checkbox: jsdoc integration appeared very late and I wanted to be able to control which files the documentation is extracted from

- Description: this is directly extracted from the source by searching the @file comment

As of today, only 28 sources are part of the library, out of a total of 100 existing sources. Indeed, because quality is measured, I was looking for an easy way to exclude files without physically removing them from the project.

All these file accesses are implemented through another middleware added to the connect task. It implements basic CRUD methods on the file system.

Well, Delete is not yet enabled because I didn't need it.

You might also have valid concerns about security as this middleware not only allows reads but also updates. I will add an extra path checking algorithm to make sure that only project files are available. As they are backed up by git, those files can be easily restored if anything goes wrong.
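A path check of this kind could look like the sketch below. It is only one possible approach, not the algorithm planned for the project: the function name isInsideProject is hypothetical, and symbolic links are not resolved.

```javascript
"use strict";

const path = require("path");

// Returns true only if requestedPath stays inside projectRoot once resolved
function isInsideProject(projectRoot, requestedPath) {
    const root = path.resolve(projectRoot);
    const target = path.resolve(root, requestedPath);
    const relative = path.relative(root, target);
    if (relative === "") {
        return true; // The project root itself
    }
    // A relative path going up, or an absolute one (other drive), is outside
    return relative !== ".."
        && !relative.startsWith(".." + path.sep)
        && !path.isAbsolute(relative);
}
```

Resolving the path before comparing it is the important part: a request such as src/../../secret.txt looks harmless as a string but ends up outside the project folder.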

As the complexity of the sources.json file constantly grows, this tool rapidly demonstrated its value. I recently had to reorganize the order of the sources: this was done in a blink!

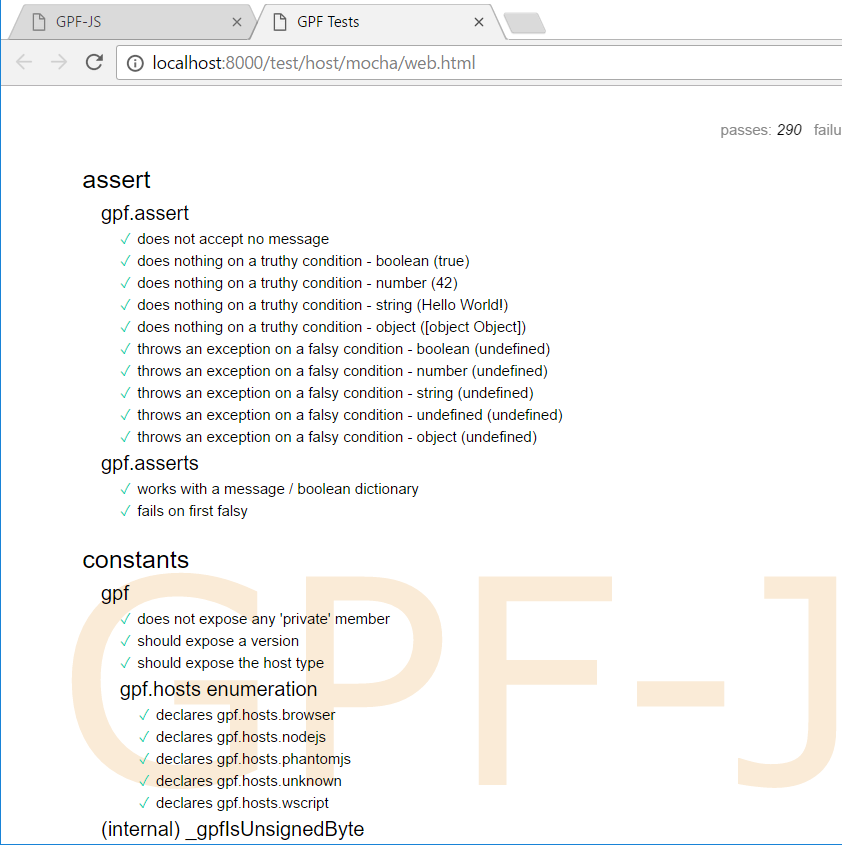

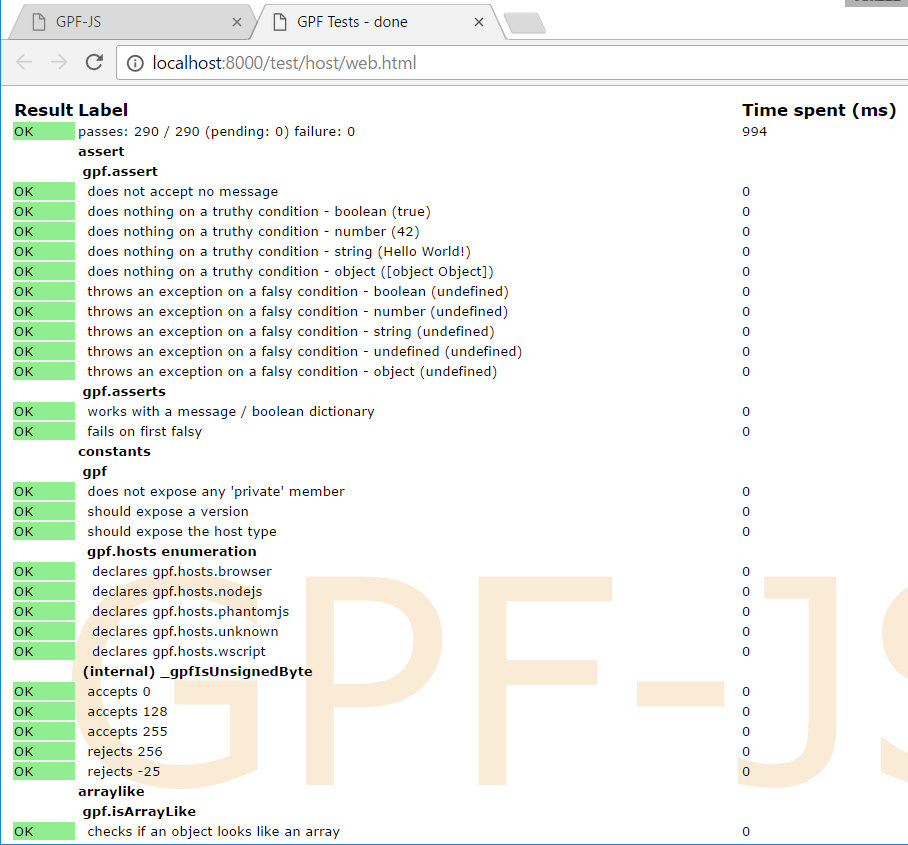

Testing

I am constantly advocating for Test Driven Development. As a consequence, there was no way I could release this version without the necessary tooling to achieve it.

All the available environments can be tested; this is why the tile named "Environments" was created. But I usually go with mocha and my bdd implementation inside the browser, so I created a second tile named "Tests".

Mocha in a browser

BDD in a browser

Selenium

Manual testing in a browser is one thing but it is even better when it is fully automated.

So I integrated Selenium to drive browsers and I wrote an explanation of how to configure it.

I had to create three helper files to deal with selenium drivers:

- detectSelenium.js: it goes over the list of possible drivers (see selenium.json) and tries to instantiate each of them. As a result, a file is generated in the tmp folder; it determines what can be used on the current host (grunt tasks are dynamically generated from this file).

- seleniumDriverFactory.js: it is responsible for initializing and configuring the driver for the selected browser.

- selenium.js: once the selenium tests are made browser-agnostic, this program executes the tests and waits for the result.

This can be triggered through grunt tasks and it has been integrated in the build process (so that it fails if anything goes wrong).

Backward compatibility

Each release comes with several files:

- gpf.js: the minified library (see below to see how this version is built), version 0.1.5

- gpf-debug.js: the concatenated library (with comments), version 0.1.5

- test.js: the minified concatenation of all test files, version 0.1.5

As it is important to ensure the backward compatibility of the API, I have some plans to keep track of the test files of every release in order to check them constantly.

Developing tests

I could tell some funny stories about test development...

But this is already a long article, so I will only give some advice learned the hard way:

- Tests are a critical part of the project. The test code must be clean and easily maintainable. When something is broken after a modification, you will be happy if you can quickly identify the reason from the tests.

- Asynchronous testing is complex: never make any assumption about the performance of the host running your tests. When I developed the timeout tests, I had a hard time understanding that the timer resolution does not allow me to consider intervals smaller than 10ms. Also, I had to make sure that concurrent timeouts are triggered simultaneously.

- Testing the internal logic of the library might be necessary: the public API relies on internal helpers. This is also true when the library supports different platforms but only one is used for code coverage (NodeJS in my case). I decided to expose those internals when using the source version. A good example is the compatibility layer: NodeJS and most browsers support all the modern APIs but Rhino or cscript don't. Hence, I had to develop tests that are capable of checking both versions (native and polyfill).
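To illustrate the last point, the sketch below applies the same expectations to a native method and to a polyfill. It is not taken from the library: everyPolyfill and behavesLikeEvery are hypothetical names, and Array.prototype.every is only used as a convenient example.

```javascript
"use strict";

// Hypothetical polyfill, as it could be written for a host lacking Array.prototype.every
function everyPolyfill(callback, thisArg) {
    var index;
    for (index = 0; index < this.length; ++index) {
        if (index in this && !callback.call(thisArg, this[index], index, this)) {
            return false;
        }
    }
    return true;
}

// The same expectations are checked whatever the implementation is
function behavesLikeEvery(every) {
    function isPositive(value) {
        return value > 0;
    }
    return every.call([1, 2, 3], isPositive) === true
        && every.call([1, -2, 3], isPositive) === false
        && every.call([], isPositive) === true;
}
```

Calling behavesLikeEvery with Array.prototype.every and then with everyPolyfill exercises both versions on the host used for code coverage, even though that host would never need the polyfill.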

Code coverage

I decided to go with istanbul for code coverage. I also evaluated Blanket.JS (see my training on JavaScript functions using stubs) but istanbul offers more flexibility.

The code coverage is evaluated by running the tests on the source version (see build process). Some threshold values are defined to determine if the files satisfy the expectations regarding the minimum coverage. If not, the build process fails.
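As a rough illustration of such a threshold check (the project relies on the grunt coverage task, not on this code), the sketch below computes percentages from the counters that istanbul stores for one file: s for statements, f for functions and b for branches. The function names and the threshold values are hypothetical.

```javascript
"use strict";

// Percentage of counters that were hit at least once (100 when there is none)
function percentage(counts) {
    if (counts.length === 0) {
        return 100;
    }
    const covered = counts.filter((count) => count > 0).length;
    return 100 * covered / counts.length;
}

// fileCoverage holds the counters of one instrumented file:
// s and f map an id to a hit count, b maps an id to an array of hit counts
function summarize(fileCoverage) {
    return {
        statements: percentage(Object.values(fileCoverage.s)),
        functions: percentage(Object.values(fileCoverage.f)),
        branches: percentage([].concat(...Object.values(fileCoverage.b)))
    };
}

// Returns the names of the metrics that are below their threshold
function findFailures(summary, thresholds) {
    return Object.keys(thresholds)
        .filter((metric) => summary[metric] < thresholds[metric]);
}
```

A build step would then fail as soon as findFailures returns a non-empty list for any file.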

Ignoring untested path

There are about 41 uses of istanbul ignore in the sources. For instance, the host detection algorithm inside boot.js can't be fully covered because NodeJS goes only through one branch.

Each comment must be followed by an explanation of its purpose. I wrote documentation on this topic.
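For readers who have never seen these hints, here is a simplified and hypothetical host detection (it is not the content of boot.js). istanbul recognizes the ignore if, ignore else and ignore next hints; in this sketch the explanation is written inside the same comment, which may differ from the convention used in the project.

```javascript
"use strict";

// Hypothetical detection based on the globals exposed by each host
function detectHost(context) {
    /* istanbul ignore if: cscript is never used to measure coverage */
    if (typeof context.WScript !== "undefined") {
        return "wscript";
    }
    /* istanbul ignore if: browsers are never used to measure coverage */
    if (typeof context.window !== "undefined") {
        return "browser";
    }
    return "nodejs";
}
```

The ignored branches still run on the other hosts: the hints only tell the coverage tool not to count them as missing.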

To be fully transparent, I detail the coverage inside the readme file.

Fixing instrumentation

Most of the time, code coverage relies on source instrumentation: this step is required to add instructions in the source code and keep track of what has been executed.

Blanket.JS does it on the fly.

For istanbul, a container variable is declared at the beginning of each modified source and this variable is referenced everywhere.

    "use strict";
    var __cov_wAQFT3LPP9UQX7F5lrKtpA = (Function('return this'))();
    if (!__cov_wAQFT3LPP9UQX7F5lrKtpA.__coverage__) {
        __cov_wAQFT3LPP9UQX7F5lrKtpA.__coverage__ = {};
    }
    __cov_wAQFT3LPP9UQX7F5lrKtpA = __cov_wAQFT3LPP9UQX7F5lrKtpA.__coverage__;
    /* ... */
    __cov_wAQFT3LPP9UQX7F5lrKtpA.s['1']++;
    _gpfExtend(gpf, {
        clone: function (obj) {
            __cov_wAQFT3LPP9UQX7F5lrKtpA.f['1']++;
            __cov_wAQFT3LPP9UQX7F5lrKtpA.s['2']++;
            return JSON.parse(JSON.stringify(obj));
        }
    });

If you have read the other articles (in particular the template mechanism one), you know that I like doing code generation. Sometimes, I rely on a function that is converted to string, altered and converted back to a function.

One annoying consequence of this method is that the newly created function can't use any variable declared outside of its scope. There are some workarounds such as passing those variables to a function factory. One good example is the polyfill for bind.
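The following sketch reproduces the problem and the factory workaround on a tiny example. It is not the library's code: makeGreeter, rebuild and rebuildWith are hypothetical names.

```javascript
"use strict";

// Returns a function that relies on a variable declared outside of its scope
function makeGreeter() {
    const prefix = "Hello ";
    return function greet(name) {
        return prefix + name;
    };
}

// Converts a function source back to a function.
// The result is created in the global scope: the access to prefix is lost.
function rebuild(source) {
    return new Function("return " + source)();
}

// Workaround: the needed variables are passed as parameters of a factory
function rebuildWith(source, names, values) {
    return new Function(...names, "return " + source).apply(null, values);
}
```

With source set to makeGreeter().toString(), calling rebuild(source)("World") throws a ReferenceError because prefix is unknown, whereas rebuildWith(source, ["prefix"], ["Hello "])("World") works as expected.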

However, things get more complicated when you don't know that the created function requires variables because it was modified by code coverage instrumentation... This one gave me some headaches...

Once I understood the issue, the solution became obvious: I had to make sure that those containers remain available even if the function is dynamically created. I modified the task to add them to the NodeJS global dictionary.
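One way to obtain this result, shown here as an assumption rather than as the actual content of the task, is to rewrite the declaration generated at the top of each instrumented file so that the container becomes a property of the global object. The function name exposeCoverageContainer is hypothetical.

```javascript
"use strict";

// Matches the declaration generated at the top of an instrumented file
const COVERAGE_DECLARATION = /\bvar\s+(__cov_[\w$]+)\s*=/;

// Turns the local container into a property of the NodeJS global object,
// so that dynamically created functions can still resolve its name
function exposeCoverageContainer(instrumentedSource) {
    return instrumentedSource.replace(COVERAGE_DECLARATION, "global.$1 =");
}
```

The other references to the container do not need to change: once the name exists on the global object, it is resolved from any scope, including the one of a function built from a string.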

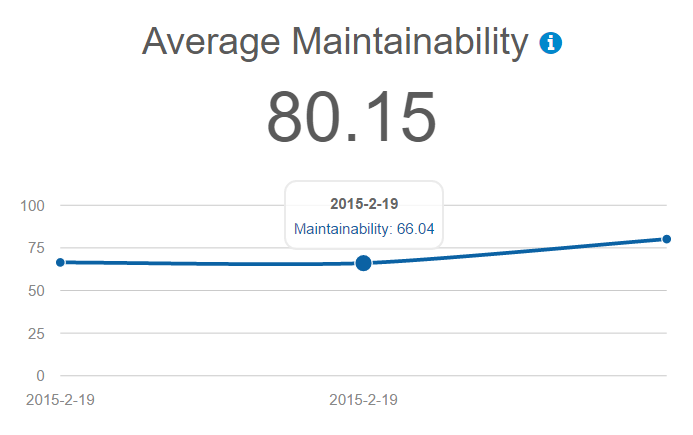

Quality with Plato

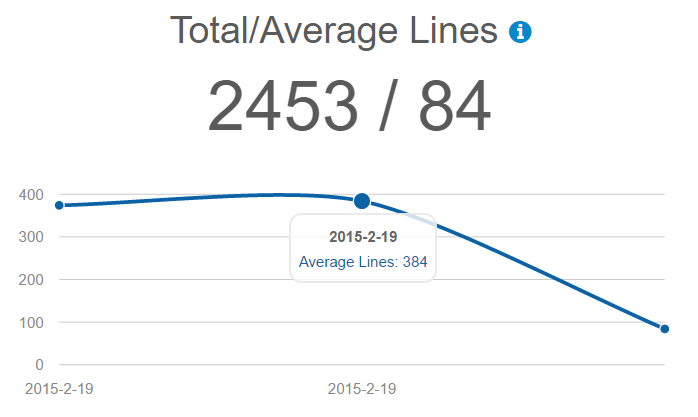

Plato is probably the tool that really changed the way I develop the library. I use it to measure the quality of the project.

Below you can see the evolution of the main criteria.

Note that the measurement taken on the 19th of February was done on all the files. Now it is done only on the files included in the library.

On top of global metrics, a report is generated for each file, showing which functions are the most complex. This gives you valuable hints on where you should put your efforts to make the file more maintainable.

You can check the version 0.1.5 analysis.

Again, I defined a minimum maintainability value which fails the build process if one source does not respect it.

Documentation

A good library is a documented one. Writing documentation and making sure it is up-to-date is a painful process and the more you can automate, the better it is. Luckily we, JavaScript developers, can use jsdoc to extract relevant information from the sources.

Documentation for version 0.1.5 can be accessed here.

Improved automation

Did I mention I am lazy? I also hate repeating myself and I do follow the DRY principle.

That's why I created my own jsdoc plugin to avoid repetition and automate obvious information such as:

- Private accessibility when the function / member name starts with an underscore

- Member types from their default value

- Custom tags

This plugin also allowed me to generate extensive documentation on errors based on the _gpfErrorDeclare instruction.
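As an idea of what such a plugin looks like, here is a minimal sketch limited to the first rule (it is not the plugin used by the project). jsdoc plugins expose a handlers object and the newDoclet handler receives each new doclet; applyPrivateConvention is a hypothetical helper name.

```javascript
"use strict";

// Flags a doclet as private when its name starts with an underscore,
// unless an access level was explicitly documented
function applyPrivateConvention(doclet) {
    if (!doclet.access && typeof doclet.name === "string"
        && doclet.name.charAt(0) === "_") {
        doclet.access = "private";
    }
}

// Event handlers exposed by the plugin
const handlers = {
    newDoclet: function (event) {
        applyPrivateConvention(event.doclet);
    }
};

// Guarded so that the sketch can also be pasted outside of a CommonJS module
if (typeof module !== "undefined" && module.exports) {
    module.exports.handlers = handlers;
}
```

Keeping the rule in a separate function makes it testable without running jsdoc.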

An article will come soon...

Development process

Following TDD, I develop the tests first. Then, I start the implementation until the test succeeds.

To help me in that task, I modified the grunt tasks watch and serve to monitor the src folder.

Every modified file triggers the linters and plato. Soon, it will also trigger the right test.

In the meantime, I just refresh my test page in the browser.

Build process

The library offers three flavors:

- Source version: this version is used for development. It loads all the source files one by one and gives access to the internals of the library.

- Debug version: this version is generated from the sources. It is built almost by concatenating the files after small transformations. A first preprocessing step deals with special comments like /*#ifdef(DEBUG)*/. Then, an AST transformation step done with esprima injects the sources inside the Universal Module Definition. The resulting AST is converted back to JavaScript using escodegen. The whole process is configured with a file.

- Release version: it uses almost the same process as the debug version but with a different configuration file. Then, a minification step is triggered.
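The preprocessing step can be pictured with the simplified sketch below. It is not the project's implementation: the function name preprocess is hypothetical, and the closing /*#endif*/ marker is an assumption since only /*#ifdef(DEBUG)*/ is mentioned above.

```javascript
"use strict";

// Keeps or drops the lines enclosed between /*#ifdef(NAME)*/ and /*#endif*/
// depending on the names set in defines (assumption: one marker per line)
function preprocess(source, defines) {
    const stack = []; // One boolean per open block: are its lines kept?
    const output = [];
    source.split("\n").forEach((line) => {
        const text = line.trim();
        const ifdef = /^\/\*#ifdef\((\w+)\)\*\/$/.exec(text);
        if (ifdef) {
            stack.push(Boolean(defines[ifdef[1]]));
        } else if (text === "/*#endif*/") {
            stack.pop();
        } else if (stack.every(Boolean)) {
            output.push(line); // Kept only if all enclosing blocks are kept
        }
    });
    if (stack.length !== 0) {
        throw new Error("Missing /*#endif*/");
    }
    return output.join("\n");
}
```

Calling it with { DEBUG: true } keeps the enclosed lines (debug flavor) and calling it with an empty object removes them (release flavor), which matches the idea of one process driven by two configurations.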

I have some ideas for performance optimization by manipulating the AST structure but this will come later.

Google closure compiler

Initially, I was using the Google closure compiler to minify the release version. However, this tool takes too many liberties with the original code (such as changing function signatures) and I ended up choosing another tool.

UglifyJS and wscript

Now I am using UglifyJS2 to generate the final release version. I opened an issue because the generated code is not compatible with cscript, but I ended up developing my own fix.

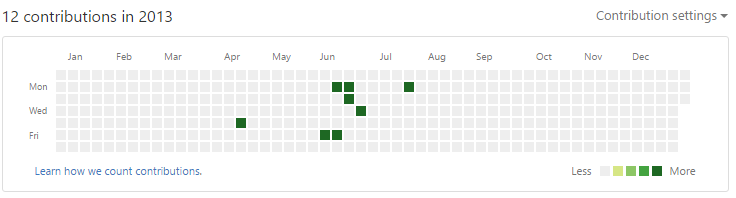

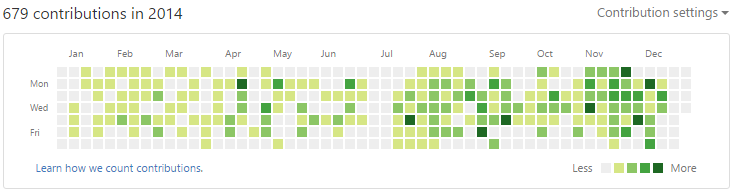

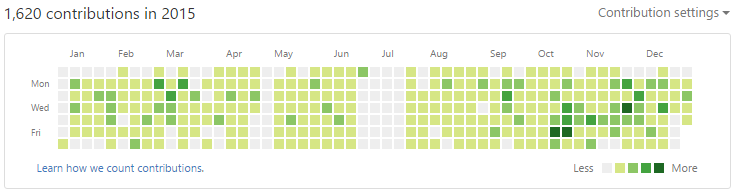

Time management

I often get the same question: "how do you find the time to work on this project?"

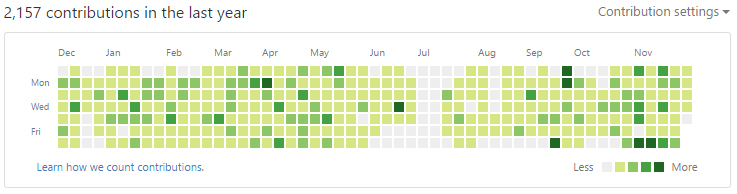

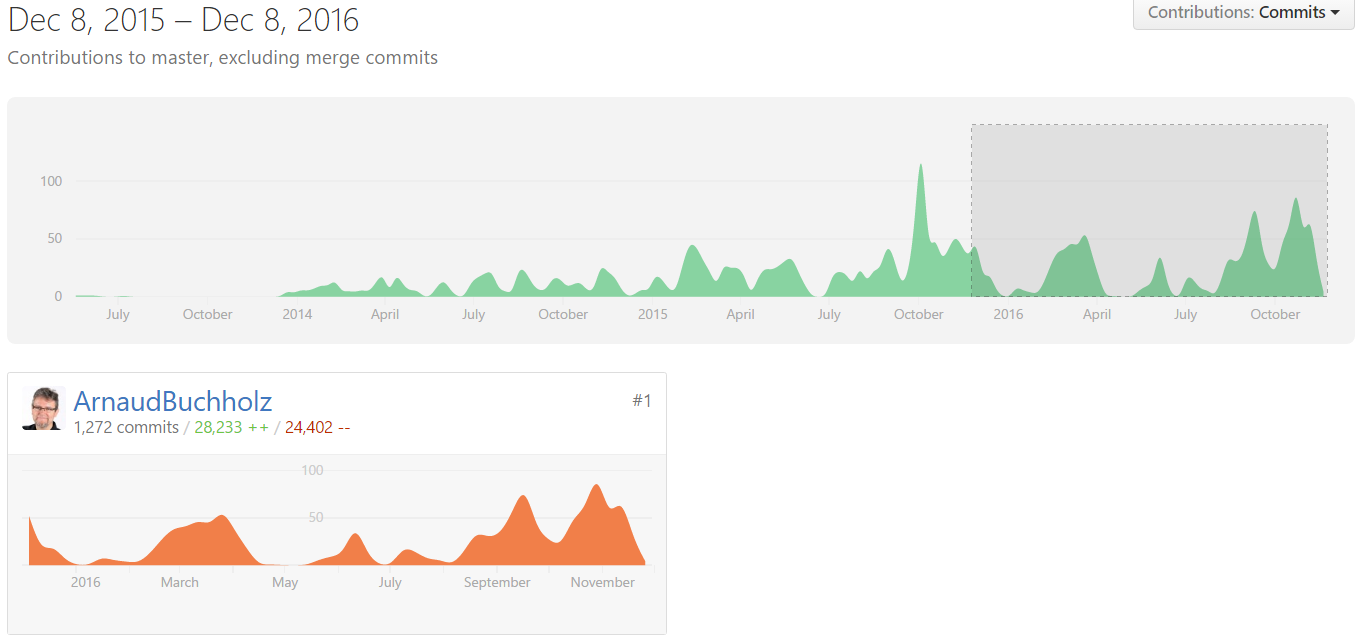

GitHub provides lots of statistics regarding how much I worked over the last years...

I force myself to push at least one file or issue every day but, in the end, I don't spend a lot of time. Over the years, I found the proper balance between my personal life, my job and my projects.

I take care of pushing each little change individually. I estimate that each change requires a maximum of 5 minutes. Over the last year, because this is not the only project I worked on, I probably spent over 250 days on the library.

This means I did almost 5 pushes per day, which represents an average of 25 minutes of work every day (but the graph shows that it is far from linear).

I guess the secret is "interruptibility": the ability to pause what you are doing and resume it later without losing focus.

What's next

I started to plan the releases more carefully: I write stories and I document the bugs. I also maintain a backlog.

The next versions will focus on putting existing code back into the library. This includes:

- classes

- interfaces

- attributes

- parsing helpers

In the near future, I would like to provide code samples in the documentation: ideally, these would be based on the tests.

More cool stuff will come soon so stay tuned!
