This release improves quality, provides a new streaming tool and introduces attributes.

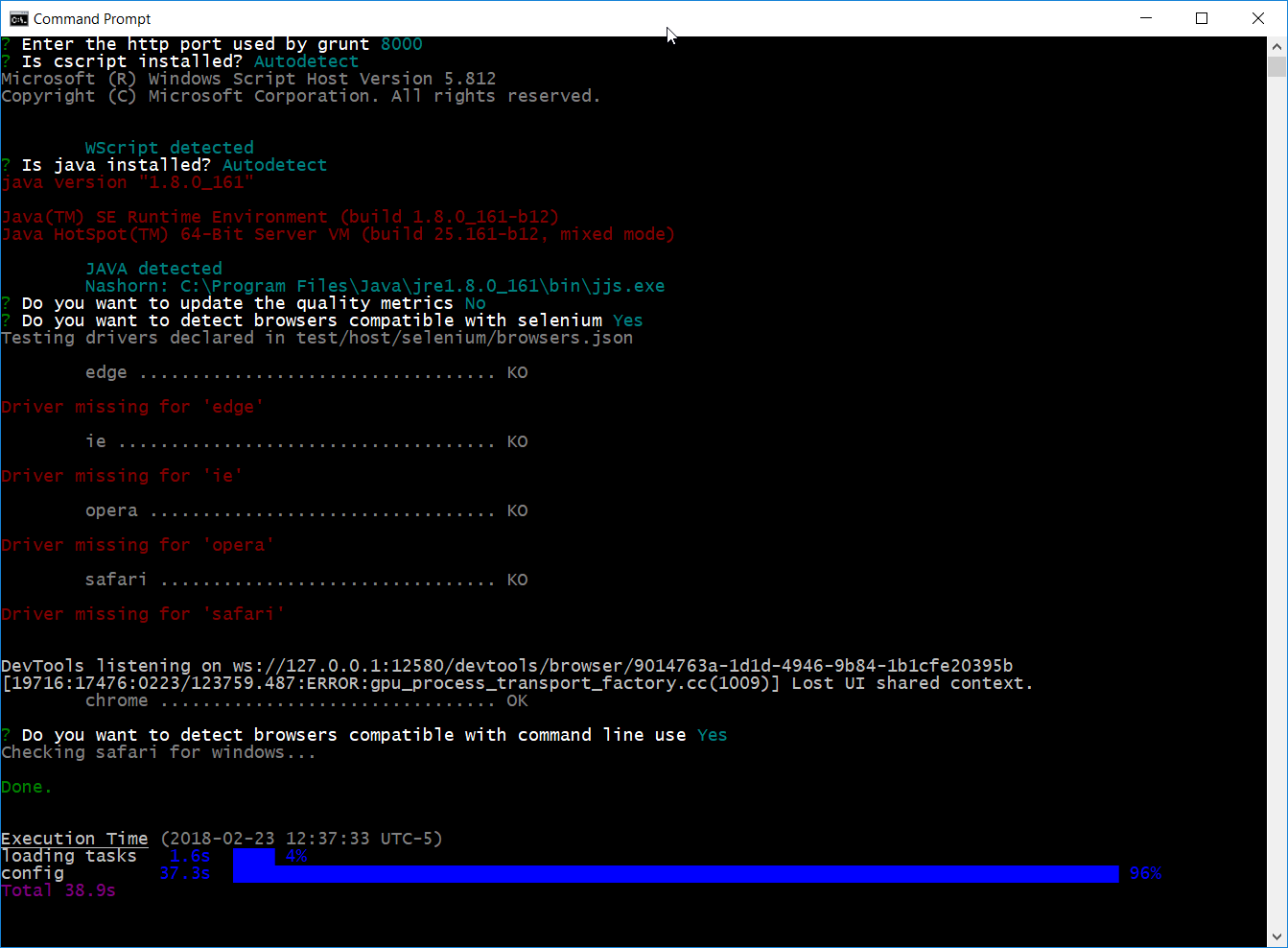

Last but not least, a new scripting host was added to the supported list: Nashorn.

New version

Released right on time, here comes the new version:

- Stories and bugs implemented

- Sources

- Documentation

- NPM package

Release content

Leftovers on gpf.require.define

When writing the article My own require implementation, the handling of JavaScript modules was split in two distinct parts:

- CommonJS handling (mostly because of synchronous requires)

- AMD / GPF handling (because it is asynchronous)

Furthermore, 'simple' CommonJS modules (i.e. modules that only fill exports, without any require) were not supported.

These problems were addressed by fixing issue #219.
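To picture the case that was missing, here is a minimal sketch of how a loader can evaluate a 'simple' CommonJS module (one that only fills `exports` and never calls `require`) by wrapping its source in a function. This is not the gpf.require.define implementation; the `evaluateCommonJS` helper is hypothetical.

```javascript
// Hypothetical helper, NOT the gpf.require.define implementation.
// Wraps CommonJS source in a function receiving require, module and
// exports, runs it, then returns whatever the module exported.
function evaluateCommonJS(source, require) {
    var module = { exports: {} },
        factory = new Function("require", "module", "exports", source);
    factory(require, module, module.exports);
    return module.exports;
}

// A 'simple' CommonJS module: no require, just exports
var simpleModule = "exports.answer = 42;\n"
    + "exports.double = function (x) { return 2 * x; };";

// The require passed in is never called by this module
var api = evaluateCommonJS(simpleModule, function (name) {
    throw new Error("Unexpected require: " + name);
});
console.log(api.answer, api.double(21));
```

The same wrapper also serves modules that do call `require`, which is why handling both kinds through a single path is simpler than special-casing them.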

Quality focus

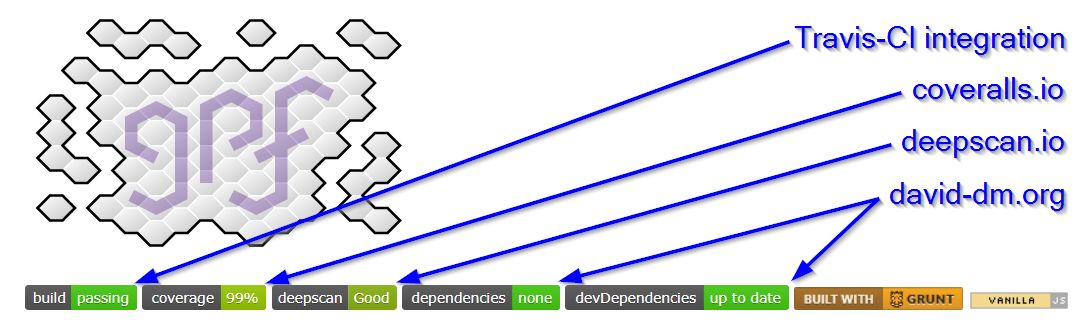

A significant effort was put into quality:

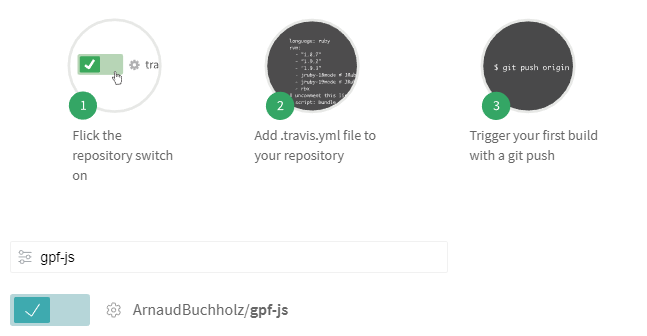

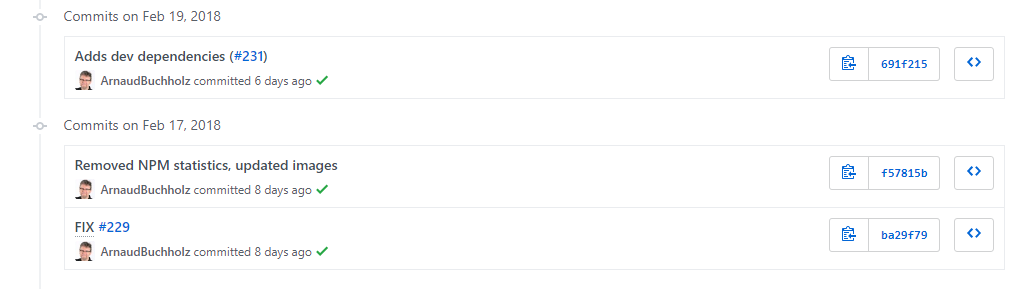

- Travis-CI, a continuous integration platform, is enabled

- This led to Linux testing and a WScript simulation for non-Windows environments

- A code coverage report is updated on each build

- A code quality tool is monitoring the sources

- Project dependencies are verified
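The WScript simulation mentioned in the list can be pictured with the following sketch: on a non-Windows host such as Node.js, a fake `WScript` object exposes the few members a script relies on. The chosen members (`Echo`, `Arguments`, `Quit`) and the `createWScriptSimulation` helper are assumptions for illustration, not the actual GPF-JS tooling.

```javascript
// Hypothetical sketch: a minimal stand-in for the Windows Script Host
// WScript object so that WScript-targeted code can run under Node.js.
function createWScriptSimulation(args, output) {
    var argList = args.slice();
    return {
        // WScript.Echo outputs its arguments separated by spaces
        Echo: function () {
            output.push(Array.prototype.slice.call(arguments).join(" "));
        },
        // WScript.Arguments is a collection exposing Item(index) and length
        Arguments: {
            length: argList.length,
            Item: function (index) {
                return argList[index];
            }
        },
        // WScript.Quit ends the script; here it only records the exit code
        Quit: function (exitCode) {
            this.exitCode = exitCode || 0;
        },
        exitCode: undefined
    };
}

var lines = [],
    WScript = createWScriptSimulation(["-verbose"], lines);
WScript.Echo("Hello", "from", "WScript");
WScript.Echo("First argument:", WScript.Arguments.Item(0));
WScript.Quit(0);
```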

This is all explained in the article Travis integration.

Nashorn support

Nashorn is another JavaScript engine implemented in Java. It's like Rhino's little brother: it's faster and delivered with the Java Runtime Environment.

This new Java host made me realize that the gpf.rhino namespace was badly named. It has been deprecated in favor of the gpf.java namespace.
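One common way to deprecate a namespace without breaking existing code is to keep the old name as an alias that warns on access. The sketch below assumes that approach; it does not claim to be how GPF-JS deprecated gpf.rhino, and `deprecateAlias` and the `lib` root object are hypothetical.

```javascript
// Hypothetical sketch: keep a deprecated namespace working as an alias
// of its replacement, warning only on the first access.
function deprecateAlias(root, oldName, newName, warn) {
    var warned = false;
    Object.defineProperty(root, oldName, {
        enumerable: false,
        configurable: true,
        get: function () {
            if (!warned) {
                warned = true;
                warn(oldName + " is deprecated, use " + newName + " instead");
            }
            return root[newName];
        }
    });
}

// Minimal stand-in for a library root object (hypothetical content)
var lib = {
    java: { hypotheticalMember: true }
};
var warnings = [];
deprecateAlias(lib, "rhino", "java", function (message) {
    warnings.push(message);
});

// Old code keeps working: lib.rhino returns the same object as lib.java
var sameNamespace = lib.rhino === lib.java;
```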

Filtering stream

The previous release introduced gpf.stream.pipe to chain streams together.

This release delivers a filtering processor that forwards or blocks data based on a filtering function. Here is a modified version of the previous release's sample that includes filtering:

// Read a CSV file and keep only some records
var csvFile = gpf.fs.getFileStorage()
        .openTextStream("file.csv", gpf.fs.openFor.reading),
    lineAdapter = new gpf.stream.LineAdapter(),
    csvParser = new gpf.stream.csv.Parser(),
    filter = new gpf.stream.Filter(function (record) {
        return record.FIELD === "expected value";
    }),
    output = new gpf.stream.WritableArray();
// csvFile -> lineAdapter -> csvParser -> filter -> output
gpf.stream.pipe(csvFile, lineAdapter, csvParser, filter, output)
    .then(function () {
        return output.toArray();
    })
    .then(function (records) {
        // process records
    });
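The principle of a filtering processor can be sketched independently of GPF-JS: each record written to the filter is either forwarded to the next writable stream or dropped, depending on the filtering function. The promise-based `write(data)` contract and the `ArrayWriter` / `FilterSketch` names are assumptions made for this example; they do not reproduce the gpf.stream interfaces.

```javascript
// Hypothetical sketch of a filtering processor (not gpf.stream.Filter).
// Assumption: a writer exposes write(data) returning a Promise.
function ArrayWriter() {
    this.items = [];
}
ArrayWriter.prototype.write = function (data) {
    this.items.push(data);
    return Promise.resolve();
};

function FilterSketch(filterFunc, next) {
    this.filterFunc = filterFunc;
    this.next = next;
}
// Forward data to the next writer only when filterFunc accepts it
FilterSketch.prototype.write = function (data) {
    if (this.filterFunc(data)) {
        return this.next.write(data);
    }
    return Promise.resolve(); // blocked: nothing is forwarded
};

var sink = new ArrayWriter(),
    filter = new FilterSketch(function (record) {
        return record.FIELD === "expected value";
    }, sink),
    records = [
        { FIELD: "expected value", id: 1 },
        { FIELD: "other", id: 2 },
        { FIELD: "expected value", id: 3 }
    ];

// Write records one after the other, then report what reached the sink
var done = records.reduce(function (previous, record) {
    return previous.then(function () {
        return filter.write(record);
    });
}, Promise.resolve()).then(function () {
    console.log(sink.items.map(function (record) { return record.id; }));
});
```

Writing sequentially keeps the record order intact in the sink, which matters when the filter sits in the middle of a pipe.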

Attributes

Back in 2016, an article introducing GPF-JS was written to explain the concepts expected in the library: classes, interfaces and attributes.

The last pillar is introduced in this release.

It all starts with its simplest aspects:

- The gpf.attributes.Attribute base class

- The possibility to specify attributes in a class definition (not yet documented but check the tests)

- An API to extract attributes from a class
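Since the attribute syntax in class definitions is not documented yet, the following sketch only illustrates the three concepts listed above in plain JavaScript: an attribute base class, attributes attached to class members, and a function extracting them, optionally filtered by attribute type. Every name in it (`Attribute`, `SerializableAttribute`, `defineAttributes`, `getAttributes`) is hypothetical and does not reflect the gpf.attributes API.

```javascript
// Hypothetical sketch of the attribute concepts (not gpf.attributes).
// Base class for all attributes
function Attribute() {}

// A sample attribute carrying a value
function SerializableAttribute(name) {
    Attribute.call(this);
    this.name = name;
}
SerializableAttribute.prototype = Object.create(Attribute.prototype);
SerializableAttribute.prototype.constructor = SerializableAttribute;

// Attach attributes to class members: { memberName: [attribute, ...] }
function defineAttributes(Class, attributesByMember) {
    Class.attributes = attributesByMember;
}

// Extract attributes, keeping only instances of AttributeClass
function getAttributes(Class, AttributeClass) {
    var result = {},
        all = Class.attributes || {};
    Object.keys(all).forEach(function (member) {
        var matching = all[member].filter(function (attribute) {
            return attribute instanceof (AttributeClass || Attribute);
        });
        if (matching.length) {
            result[member] = matching;
        }
    });
    return result;
}

function Person(firstName, age) {
    this.firstName = firstName;
    this.age = age;
}
defineAttributes(Person, {
    firstName: [new SerializableAttribute("first_name")],
    age: [new Attribute()]
});

// Only firstName carries a SerializableAttribute
var serializable = getAttributes(Person, SerializableAttribute);
```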

More features will come soon, but this rewrite is very exciting as it fixes all the issues of the initial draft (developed in 2015).

Improved development environment

It is now possible to use an authentication token to connect to the GitHub platform (previously, user and password were required). This opens the door to a new tile in the dashboard that will speed up development.
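For reference, the GitHub REST API accepts a personal access token in the `Authorization` header instead of the user and password pair used with basic authentication. The sketch below only builds the request options; the `buildGitHubRequest` helper, the `OWNER/REPO` path and the `User-Agent` value are placeholders, and actually sending the request is left out so that no credentials are needed to run it.

```javascript
// Hypothetical helper: prepare a GitHub REST API request authenticated
// with a personal access token instead of user / password.
function buildGitHubRequest(path, token) {
    return {
        url: "https://api.github.com" + path,
        options: {
            method: "GET",
            headers: {
                "Accept": "application/vnd.github.v3+json",
                // Token authentication (basic auth would send user:password)
                "Authorization": "token " + token,
                // GitHub rejects API requests without a User-Agent header
                "User-Agent": "release-tooling-sketch"
            }
        }
    };
}

var request = buildGitHubRequest("/repos/OWNER/REPO/milestones", "<TOKEN>");
// With a fetch implementation (e.g. Node.js 18+), it could be sent as:
// fetch(request.url, request.options)
```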

This is already used in the release process, which has also been improved to prepare the next version in its final step. Unfortunately, the command line failed when it was first run for this release, and the remaining steps were finished manually

...which demonstrates how much value automation brings in this procedure...

Lessons learned

If it's not tested, it doesn't work. This was confirmed by the Travis integration, where the tools were run for the first time on a non-Windows environment.

The build process is taking longer, for two reasons:

- There is a new host to test (Nashorn)

- The library now has 9 legacy test files

A strategy to reduce the number of tests being run will be defined.

With the integration of Deepscan.io, a lot of cleanup was done because there is no easy way to exclude specific files from the tool (other than listing them one by one). All unused files were moved from src and test to the new lost+found folder.

Next release

As the library becomes more complex (and bigger), it might evolve to offer several 'flavors'. The goal would be to provide smaller, dedicated versions with a reduced feature set.

For instance, a modern browser version could drop the file system management as well as the compatibility layer.

Today, the dependencies.json file enumerates module dependencies. To be able to isolate modules, a more accurate view is required and some modifications must be made in the sources.json file.
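Isolating a flavor essentially means computing, from a dependency map, the transitive set of modules its entry points need. The sketch below assumes a simple `{ module: [dependencies] }` structure; it is not the actual format of dependencies.json, and the module names are made up.

```javascript
// Hypothetical sketch: collect every module reachable from the entry
// points, assuming a dependency map shaped as { module: [deps, ...] }.
function collectModules(dependencies, entryPoints) {
    var included = {},
        ordered = [];
    function visit(name) {
        if (included[name]) {
            return; // already collected (also protects against cycles)
        }
        included[name] = true;
        (dependencies[name] || []).forEach(function (dependency) {
            visit(dependency);
        });
        ordered.push(name); // dependencies are pushed before dependents
    }
    entryPoints.forEach(function (entryPoint) {
        visit(entryPoint);
    });
    return ordered;
}

var dependencies = {
    "core": [],
    "stream": ["core"],
    "fs": ["stream"],
    "csv": ["stream"],
    "attributes": ["core"]
};

// A flavor that only needs CSV parsing pulls neither fs nor attributes
var flavor = collectModules(dependencies, ["csv"]);
```

Because dependencies are pushed before the modules that need them, the resulting list can also serve as a concatenation order when building the flavor.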

The next release content will mostly focus on:

- Documenting attributes

- Refining the WScript simulation

- Improving the development framework (illustrating the versions)

- Adding a new stream helper to transform records

This article summarizes how Travis CI was plugged into the GPF-JS GitHub repository to assess quality on every push.