The need for HTTP requests

In a world of interoperability, internet of things and microservices, the HTTP protocol, almost 30 years old, defines a communication foundation that is widely known and implemented.

Originally designed for human-to-machine communication, this protocol also supports machine-to-machine communication through standardized concepts and interfaces.

Evolution of HTTP requests in browsers

Web browsers were the first applications implementing this protocol to access the World Wide Web.

Netscape communicator loading screen

Before AJAX was conceptualized, web pages had to be fully refreshed from the server to reflect any change. JavaScript was used for simple client-side manipulations. From a user experience point of view, it was acceptable (mostly because we had no other choice), but it limited the development of user interfaces.

I guess

Then AJAX introduced new ways to design web pages: only the new information had to be fetched from the server, without reloading the page. As a result, pages became faster, crisper and fully asynchronous.

However, each browser had its own implementation of AJAX requests (not to mention DOM, event handling and other incompatibilities). That's why jQuery, initially designed to offer a uniform API that would work identically on any browser, became so popular.

jQuery everywhere

Today, the situation has changed: almost all browsers implement the same APIs and, consequently, modern libraries consider browsers to be a single environment.

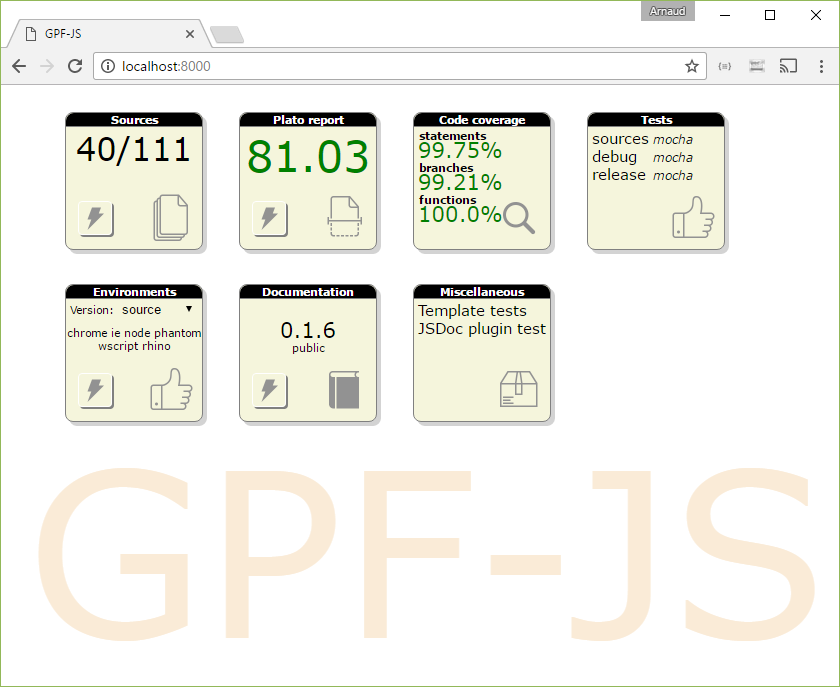

GPF-JS

GPF-JS obviously supports browsers, where it leverages AJAX requests to implement HTTP requests. But the library is also compatible with NodeJS as well as other, less common, command line hosts: WScript, Rhino and PhantomJS.

Designing a single API that is compatible with all these hosts means dealing with the specificities of each one.

How to test HTTP requests

When you follow the TDD practice, you write tests before writing any line of production code. But in that case, the first challenge was to figure out how the whole HTTP layer could be tested. Mocking was not an option.

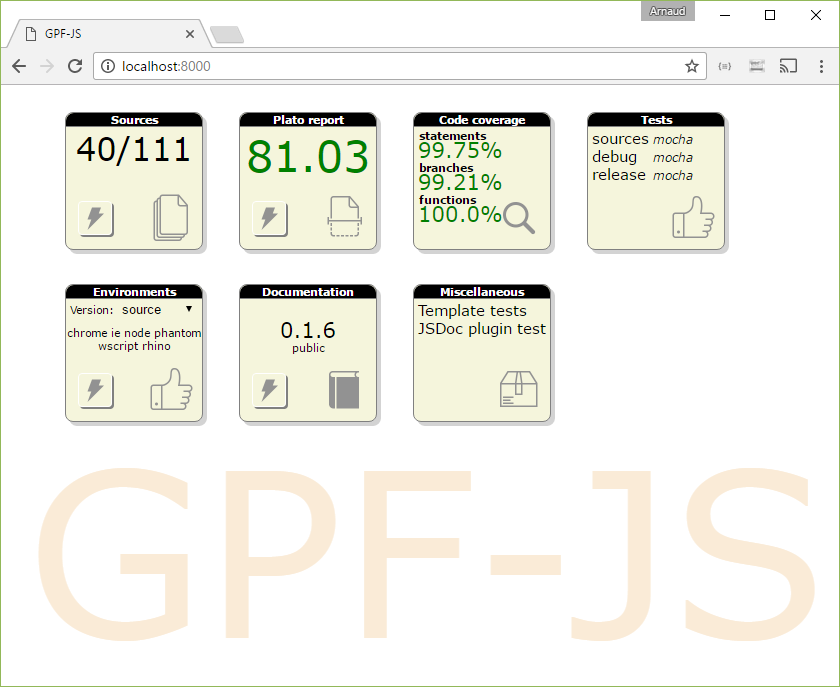

The project development environment heavily relies on the grunt connect task to deliver the dashboard: a place where the developer can access all the tools (source list, tests, documentation...).

dashboard

As a lazy developer, I need only one command line for my development (grunt). All the tools are then available within the dashboard.

Some middleware is plugged in to add extra features, such as:

- cache: introduced with version 0.1.7, it is used by the command line that tests browsers when Selenium is not available. It implements a data storage service similar to Redis.

- fs: a file access service used to read, create and delete files within the project storage. For instance, it is used by the sources tile to check if a source has a corresponding test file.

- grunt: a wrapper used to execute grunt tasks and format their logs.

Based on this experience, it became obvious that the project needed another extension: the echo service. It basically accepts any HTTP request and the response either reflects the request details or can be modified through URL parameters.
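The echo behavior can be sketched as a connect-style middleware (a function receiving `req`, `res` and `next`). This is a minimal sketch under stated assumptions, not the project's middleware: `buildEchoResponse`, `echoMiddleware`, the `/echo` mount point and the `statusCode` / `response` URL parameters are hypothetical names chosen for illustration.

```javascript
// Minimal echo service sketch (hypothetical names, not the GPF-JS source).
// The pure part is separated from the middleware so it can be tested without a server.
function buildEchoResponse (request) {
    // In Node, req.url only contains the path and query, hence the dummy base
    var params = new URL(request.url, "http://localhost").searchParams,
        forcedStatus = parseInt(params.get("statusCode"), 10), // hypothetical ?statusCode=500
        forcedBody = params.get("response"); // hypothetical ?response=any%20text
    return {
        statusCode: isNaN(forcedStatus) ? 200 : forcedStatus,
        headers: {"Content-Type": forcedBody === null ? "application/json" : "text/plain"},
        // Either the forced body or a JSON reflection of the request details
        body: forcedBody === null ? JSON.stringify({
            method: request.method,
            url: request.url,
            headers: request.headers,
            body: request.body
        }) : forcedBody
    };
}

// Connect-style middleware: reads the whole request body, then answers with the echo
function echoMiddleware (req, res, next) {
    if (req.url.indexOf("/echo") !== 0) {
        next(); // not an echo request, let the next middleware handle it
        return;
    }
    var chunks = [];
    req.on("data", function (chunk) {
        chunks.push(chunk);
    });
    req.on("end", function () {
        var echo = buildEchoResponse({
            method: req.method,
            url: req.url,
            headers: req.headers,
            body: Buffer.concat(chunks).toString("utf8")
        });
        res.writeHead(echo.statusCode, echo.headers);
        res.end(echo.body);
    });
}
```

With grunt-contrib-connect, such a function would typically be registered through the task's `middleware` option, which receives the default middleware list and returns the one to use.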

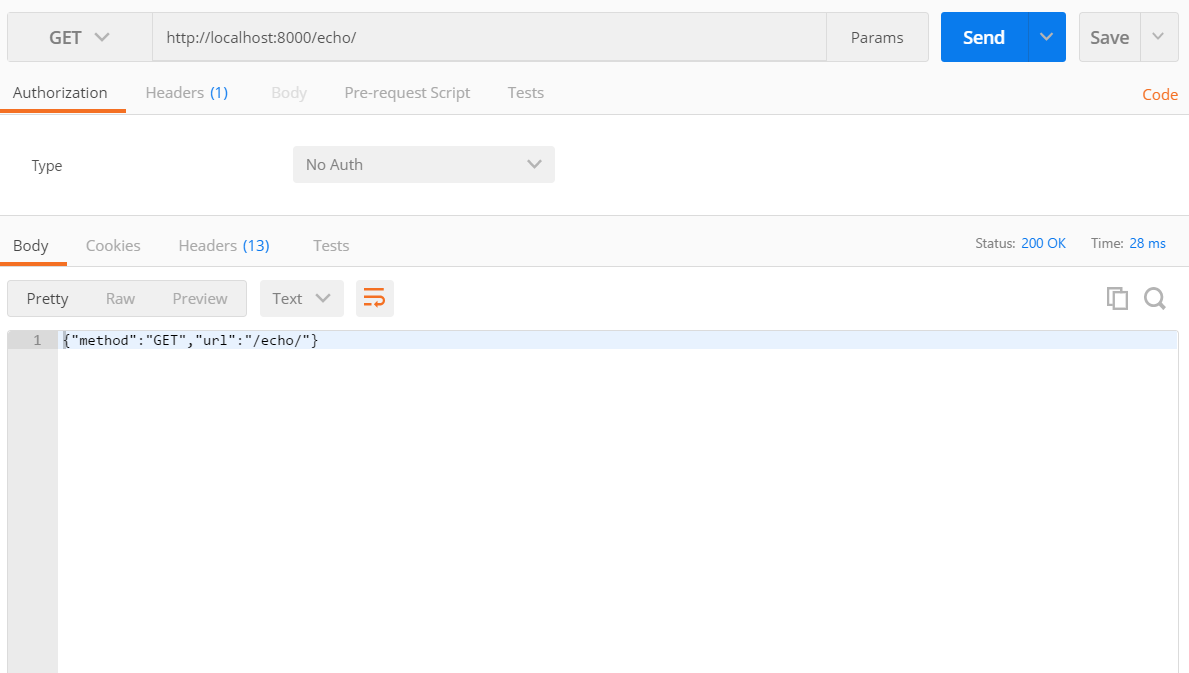

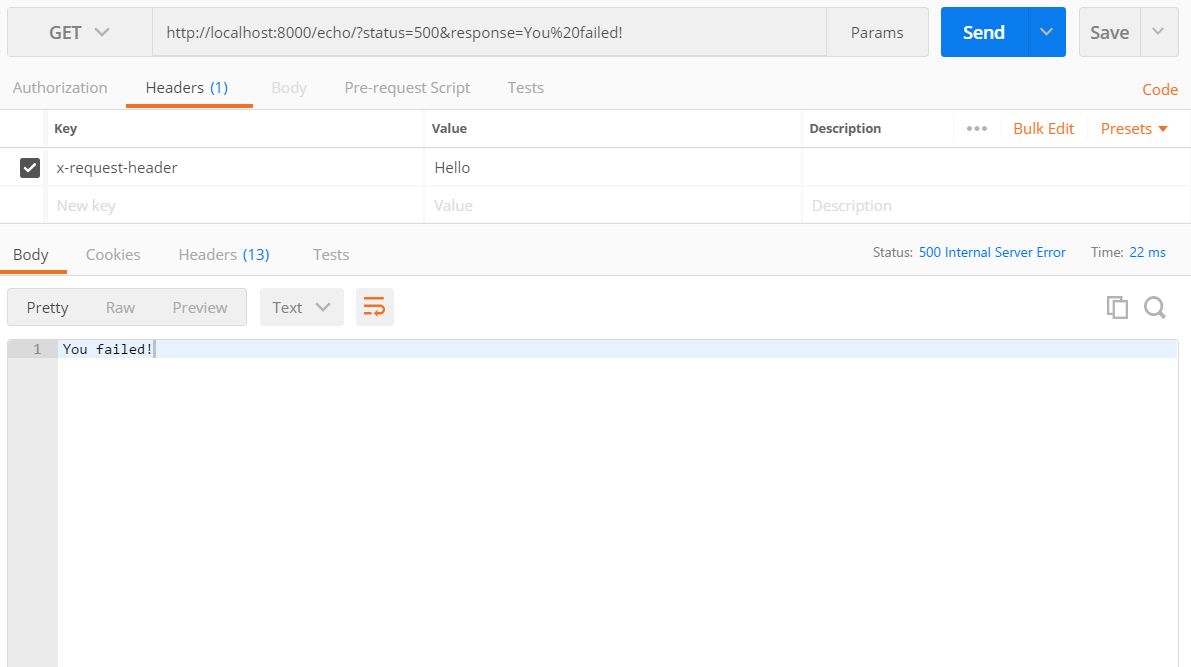

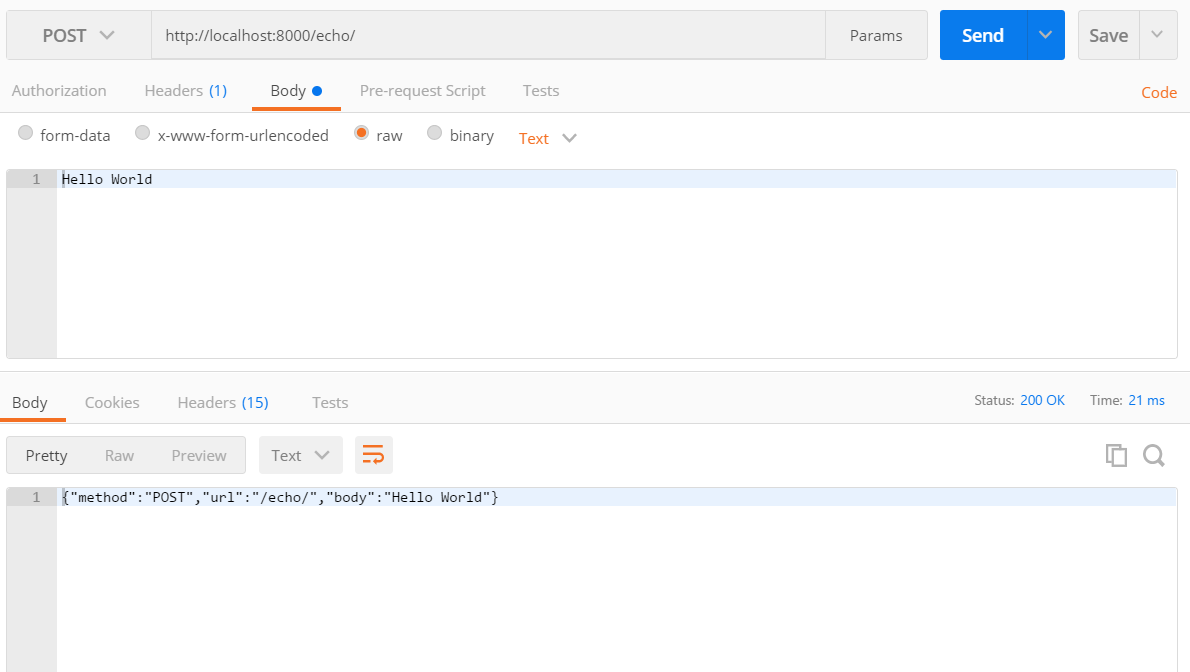

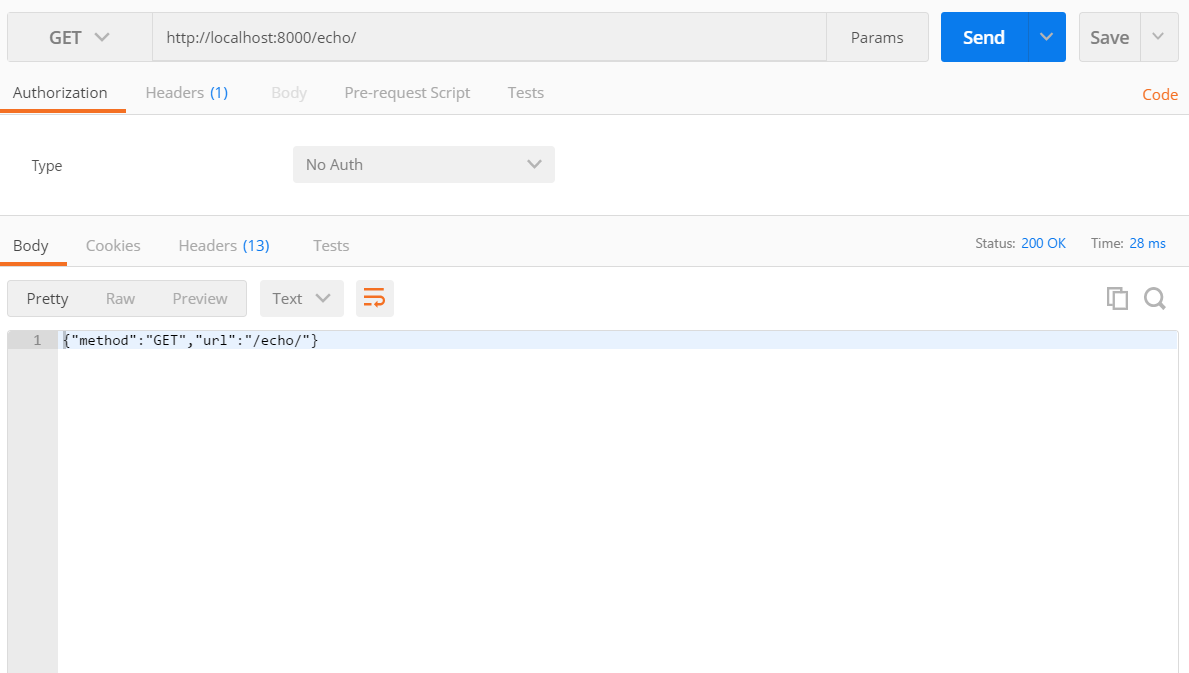

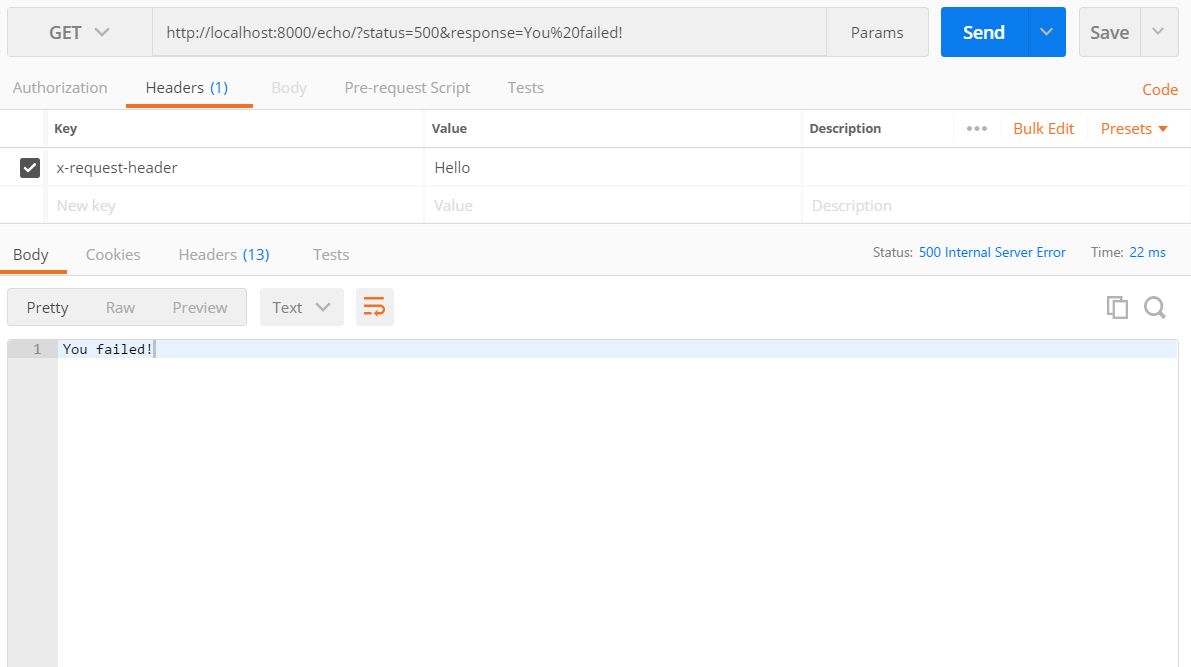

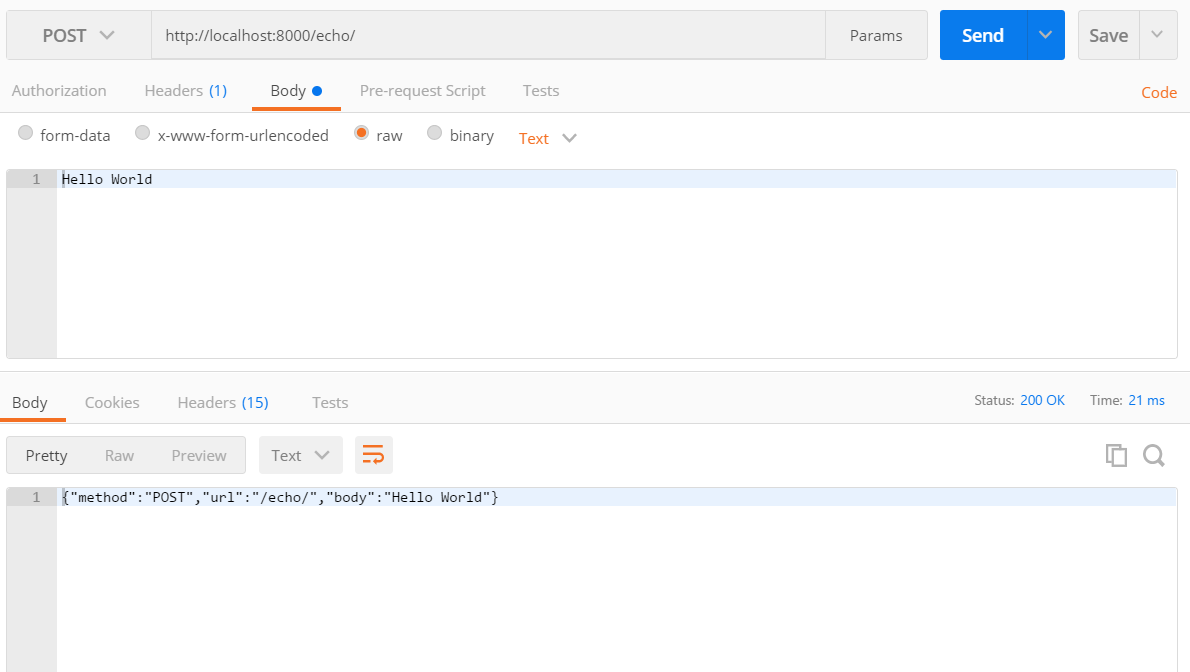

Postman was used to test the tool that will be used to test the HTTP layer...

GET

GET 500

POST

One API to rule them all

Now that the HTTP layer can be tested, the API must be designed so that the tests can be written.

Input

An HTTP request starts with some parameters:

- The Uniform Resource Locator (URL), which determines the web address the request is sent to. There are several ways to specify this location: NodeJS offers a URL class that exposes its different parts (host, port...). However, the simplest representation remains the one everybody is used to: the string displayed in the browser location bar.

- The request method (also known as verb) which specifies the kind of action you want to execute.

- An optional list of header fields meant to configure the request processing (such as specifying the expected answer type...). The simplest way to provide this list is to use a key/value dictionary, meaning an object.

- The request body, mostly used for POST and PUT actions, which contains the data to upload to the server. Even if the library supports the concept of streams, most of the expected use cases imply sending an envelope that is built synchronously (text, JSON, XML...). Also, JavaScript (in general) is not good at handling binary data, hence a simple string is expected as the request body.

This leads to the definition of the httpRequestSettings type.
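The four inputs above map naturally onto a plain object. The sketch below assumes the property names `method`, `url`, `headers` and `data`; the GPF-JS documentation is the reference for the exact shape of httpRequestSettings. The `normalizeHttpSettings` helper is hypothetical and only illustrates defaulting and validation.

```javascript
/**
 * Assumed shape of httpRequestSettings (check the GPF-JS documentation for the real one)
 * @typedef {Object} HttpRequestSettings
 * @property {string} [method="GET"] Request method (verb)
 * @property {string} url Web address, as typed in the browser location bar
 * @property {Object<string, string>} [headers] Header fields as a key/value dictionary
 * @property {string} [data] Request body, a simple string
 */

// Hypothetical helper: validates the settings and fills the defaults
function normalizeHttpSettings (settings) {
    if (typeof settings.url !== "string") {
        throw new TypeError("url must be a string");
    }
    if (settings.data !== undefined && typeof settings.data !== "string") {
        throw new TypeError("data must be a string");
    }
    var headers = {};
    Object.keys(settings.headers || {}).forEach(function (name) {
        headers[name] = String(settings.headers[name]); // header values travel as text
    });
    return {
        method: (settings.method || "GET").toUpperCase(),
        url: settings.url,
        headers: headers,
        data: settings.data
    };
}

var settings = normalizeHttpSettings({
    method: "post",
    url: "http://localhost:8000/echo",
    headers: {"Content-Type": "application/json"},
    data: JSON.stringify({hello: "world"})
});
```

Keeping the body as a string (here a serialized JSON envelope) follows the choice explained above.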

Output

On completion, the server sends back a response composed of:

- A status code that provides feedback about how the server processed the request. Typically, 200 means that everything went well, 4xx codes signal a client error and 5xx codes (such as 500) signal a server error.

- A list of response headers. For instance, this is how cookies are transmitted by the server to the client (and, actually, they are also sent back by the client to the server through headers).

- The response body: depending on what has been requested, it contains the server answer. It could be read through a readable stream but, for the same reasons as the request body, a simple string containing the whole response text is returned.

This leads to the definition of the httpRequestResponse type.

If needed, the API may evolve later to introduce the possibility to use streams.
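Conversely, the response can be sketched as an object with three members. `responseText` is the name used by the shortcut example of this article; `status` and `headers` are assumed names. Since XMLHttpRequest (and WinHttpRequest) return the headers as one raw text block, the hypothetical `parseRawHeaders` helper shows how that block could become a dictionary.

```javascript
/**
 * Assumed shape of httpRequestResponse
 * @typedef {Object} HttpRequestResponse
 * @property {number} status Status code (200, 404, 500...)
 * @property {Object<string, string>} headers Response headers as a dictionary
 * @property {string} responseText Whole response body
 */

// Hypothetical helper: "Name: value" lines separated by CRLF -> dictionary.
// Names are lowercased; a repeated name keeps its last value (a simplification).
function parseRawHeaders (raw) {
    var headers = {};
    raw.split(/\r?\n/).forEach(function (line) {
        var separator = line.indexOf(":");
        if (separator > 0) {
            headers[line.substring(0, separator).trim().toLowerCase()] = line.substring(separator + 1).trim();
        }
    });
    return headers;
}

// Hypothetical helper: status code family, e.g. 404 -> "4xx"
function statusFamily (status) {
    return Math.floor(status / 100) + "xx";
}

function buildHttpResponse (status, rawHeaders, responseText) {
    return {
        status: status,
        headers: parseRawHeaders(rawHeaders),
        responseText: responseText
    };
}
```

Only the first colon separates the name from the value, so values that contain colons (dates, URLs) survive intact.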

Waiting for the completion

An HTTP request is asynchronous; hence the client must wait for the server to answer. To avoid callback hell, a Promise is used to represent the eventual completion of the request.

This leads to the definition of the gpf.http.request API.

The promise is resolved when the server answers, whatever the status code (including 500). It is rejected only when something goes wrong during the communication itself.
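This resolve/reject contract can be sketched by wrapping a callback-based, host-specific implementation into a Promise. `requestWithPromise` and the `(settings, callback)` signature of the host implementation are hypothetical; the point is that any status code resolves and only a transport error rejects.

```javascript
// Hypothetical contract: hostImpl(settings, callback) calls callback(error, response),
// where error is set only when the communication itself failed
function requestWithPromise (hostImpl, settings) {
    return new Promise(function (resolve, reject) {
        // An exception thrown synchronously by hostImpl also rejects the promise
        hostImpl(settings, function (error, response) {
            if (error) {
                reject(error); // refused connection, unknown host...
            } else {
                resolve(response); // any status code, 4xx and 5xx included
            }
        });
    });
}

// Fake host implementation: a server error for one URL, a network failure for another
function fakeHostImpl (settings, callback) {
    if (settings.url === "http://unreachable.invalid/") {
        callback(new Error("ECONNREFUSED"));
    } else {
        callback(null, {status: 500, headers: {}, responseText: "Internal Server Error"});
    }
}

requestWithPromise(fakeHostImpl, {method: "GET", url: "http://localhost:8000/echo?statusCode=500"})
    .then(function (response) {
        console.log(response.status); // 500: resolved, the server did answer
    });
```

The caller therefore checks `status` in the fulfillment handler, and the rejection handler is reserved for genuine communication problems.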

Shortcuts

For simple requests, such as a GET with no specific header, the API must be easy to use. Shortcuts are defined to shorten the call, for instance:

```javascript
gpf.http.get(baseUrl).then(function (response) {
    process(response.responseText);
}, handleError);
```

See the documentation.
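Shortcuts of this kind can be derived from the generic request by fixing the method. In this sketch, `http` is a local stand-in for gpf.http whose `request` simply echoes its settings, and the `(url, data)` signature of the shortcuts is an assumption; the GPF-JS documentation gives the real signatures.

```javascript
// Stand-in for the generic request API: resolves with the settings it received
var http = {
    request: function (settings) {
        return Promise.resolve({status: 200, headers: {}, responseText: JSON.stringify(settings)});
    }
};

// Builds a shortcut for one method; only methods with a body forward the data
function makeShortcut (method, hasBody) {
    return function (url, data) {
        var settings = {method: method, url: url};
        if (hasBody) {
            settings.data = data;
        }
        return http.request(settings);
    };
}

http.get = makeShortcut("GET", false);
http.head = makeShortcut("HEAD", false);
http["delete"] = makeShortcut("DELETE", false); // "delete" is a reserved word in ES3
http.post = makeShortcut("POST", true);
http.put = makeShortcut("PUT", true);

http.get("http://localhost:8000/echo").then(function (response) {
    console.log(JSON.parse(response.responseText).method); // GET
});
```

Every shortcut goes through the same `request` function, so host handling stays in one place.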

Handling different environments

Inside the library, there are almost as many implementations as there are supported hosts. Each one lives in its own file, named after it, under the http source folder. They are detailed below.

Consequently, there are several ways to call the proper implementation depending on the host:

- Inside the request API, create an if / else condition that checks every possibility

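```javascript
gpf.http.request = function () {
    if (_GPF_HOST.NODEJS === _gpfHost) {
        // NodeJS implementation
    } else if (_GPF_HOST.BROWSER === _gpfHost) {
        // Browser implementation
    } else {
        // one more branch for each remaining host
    }
};
```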

- Have a global variable receiving the proper implementation, using an if condition inside each implementation file

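```javascript
// In each implementation file (here, the NodeJS one)
if (_GPF_HOST.NODEJS === _gpfHost) {
    _gpfHttpRequestImpl = function () {
        // NodeJS implementation
    };
}

// In the request API
gpf.http.request = function () {
    return _gpfHttpRequestImpl();
};
```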

- Create a dictionary indexing all implementations per host and then fetch the proper one on call

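```javascript
// In each implementation file (here, the NodeJS one)
_gpfHttpRequestImplByHost[_GPF_HOST.NODEJS] = function () {
    // NodeJS implementation
};

// In the request API
gpf.http.request = function () {
    return _gpfHttpRequestImplByHost[_gpfHost]();
};
```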

My preference goes to the last choice for the following reasons:

- if / else conditions increase cyclomatic complexity and, in general, the fewer ifs the better. Here, they are useless because they only compare a variable (the current host) with a list of predefined values (the host names): a dictionary lookup is more efficient.

- It is simpler to manipulate a dictionary to dynamically declare a new host or even update an existing implementation. Indeed, we could imagine a plugin mechanism that would change the way requests work by replacing the default handler.

Consequently, the internal library variable _gpfHttpRequestImplByHost contains all implementations indexed by host name. The request API calls the proper one by fetching the implementation at runtime.
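A runnable sketch of the dictionary approach, with hypothetical host names and stub implementations standing in for the real files (`implByHost` plays the role of `_gpfHttpRequestImplByHost`). It also illustrates the two benefits listed above: one implementation can be registered for several hosts (browsers and PhantomJS both rely on xhr.js), and an entry can be replaced at runtime, plugin-style.

```javascript
// Hypothetical host identifiers, playing the role of _GPF_HOST
var HOST = {BROWSER: "browser", PHANTOMJS: "phantomjs", NODEJS: "nodejs", WSCRIPT: "wscript", RHINO: "rhino"};

// Plays the role of _gpfHttpRequestImplByHost
var implByHost = {};

// Stub implementations; the real ones would each live in their own file
function xhrImpl (settings) {
    return Promise.resolve({status: 200, headers: {}, responseText: "xhr:" + settings.url});
}
implByHost[HOST.BROWSER] = xhrImpl;
implByHost[HOST.PHANTOMJS] = xhrImpl; // one implementation shared by two hosts
implByHost[HOST.NODEJS] = function (settings) {
    return Promise.resolve({status: 200, headers: {}, responseText: "nodejs:" + settings.url});
};

var currentHost = HOST.NODEJS; // would be detected once, when the library loads

function request (settings) {
    // One lookup replaces the whole if / else chain
    var impl = implByHost[currentHost];
    if (!impl) {
        return Promise.reject(new Error("No HTTP implementation for host " + currentHost));
    }
    return impl(settings);
}

// Plugin-style override: wrap the existing NodeJS entry to trace every request
var defaultNodeImpl = implByHost[HOST.NODEJS];
implByHost[HOST.NODEJS] = function (settings) {
    console.log("[http]", settings.method, settings.url);
    return defaultNodeImpl(settings);
};
```

Because the lookup happens at call time, the override takes effect for every subsequent request without touching the request function.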

Browsers

As explained in the introduction, browsers offer AJAX requests to make HTTP requests. This is possible through the XMLHttpRequest JavaScript class.

There is one major restriction when dealing with AJAX requests. You are mainly limited to the server you are currently browsing. If you try to access a different server (or even a different port on the same server), then you are entering the realm of cross-origin requests.

If needed, you will find many examples on the web on how to use it. Long story short, you can trigger a simple AJAX request in 5 lines of code.
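For illustration, here is a short AJAX request wrapped in a Promise. This is a sketch, not the content of src/http/xhr.js: `xhrRequest` is hypothetical and accepts the XMLHttpRequest constructor as an optional second parameter, so that the logic can be exercised outside a browser with a fake.

```javascript
function xhrRequest (settings, XhrClass) {
    var Xhr = XhrClass || XMLHttpRequest; // the browser class by default
    return new Promise(function (resolve, reject) {
        var xhr = new Xhr();
        xhr.open(settings.method, settings.url, true); // true: asynchronous
        Object.keys(settings.headers || {}).forEach(function (name) {
            xhr.setRequestHeader(name, settings.headers[name]);
        });
        xhr.onreadystatechange = function () {
            if (xhr.readyState === 4) { // DONE
                if (xhr.status === 0) {
                    // No answer received: network error, blocked cross-origin request...
                    reject(new Error("Network error"));
                } else {
                    resolve({
                        status: xhr.status,
                        rawHeaders: xhr.getAllResponseHeaders(), // left unparsed here
                        responseText: xhr.responseText
                    });
                }
            }
        };
        xhr.send(settings.data === undefined ? null : settings.data);
    });
}
```

Once `send` is called, the browser handles the network exchange; `onreadystatechange` only runs when the JavaScript engine is idle.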

In terms of processing, it is interesting to note that, once triggered from the JavaScript code, the network communication is fully handled by the browser: it does not require the JavaScript engine. This means that the page may execute some code while the request is being transmitted to the server as well as while waiting for the response. However, to be able to process the result (i.e. trigger the callback), the JavaScript engine must be idle.

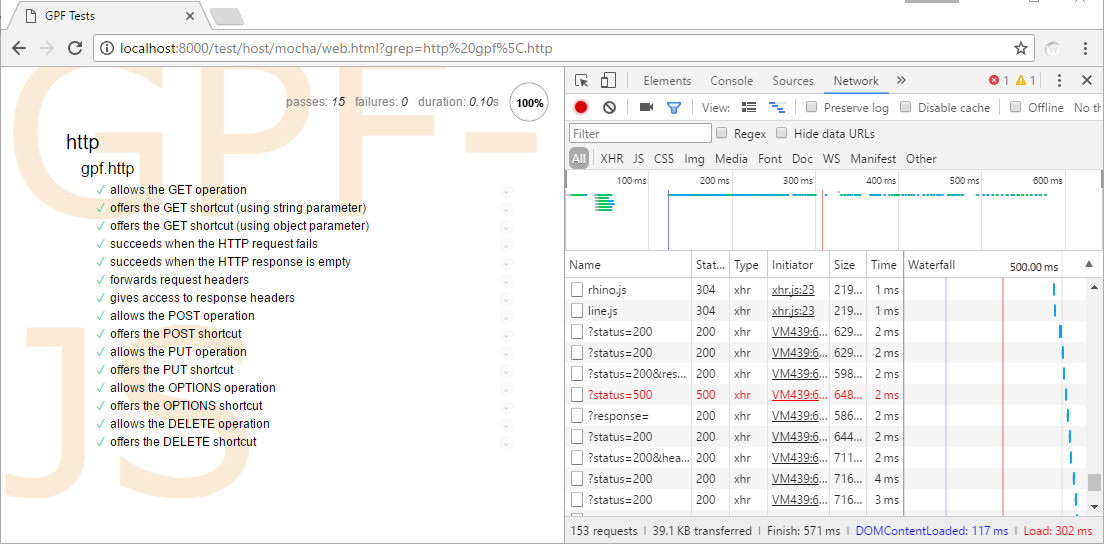

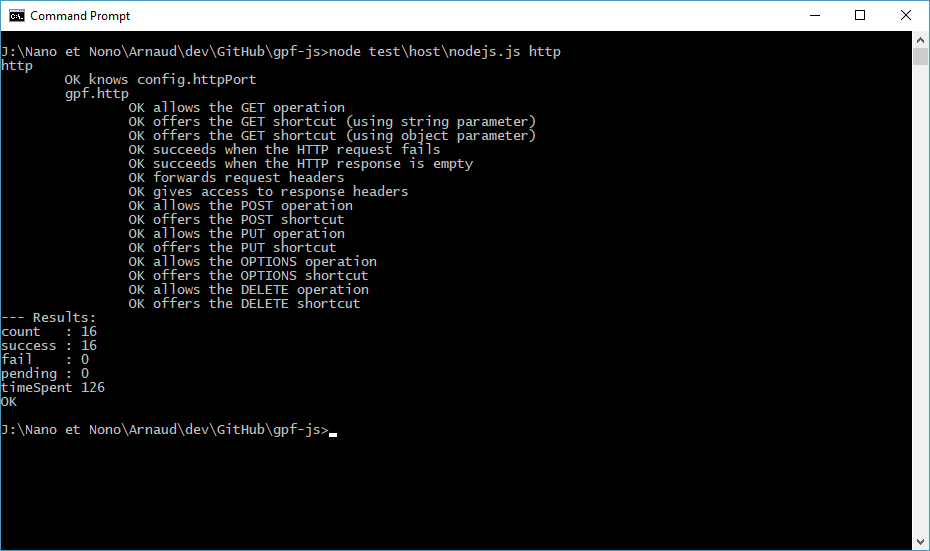

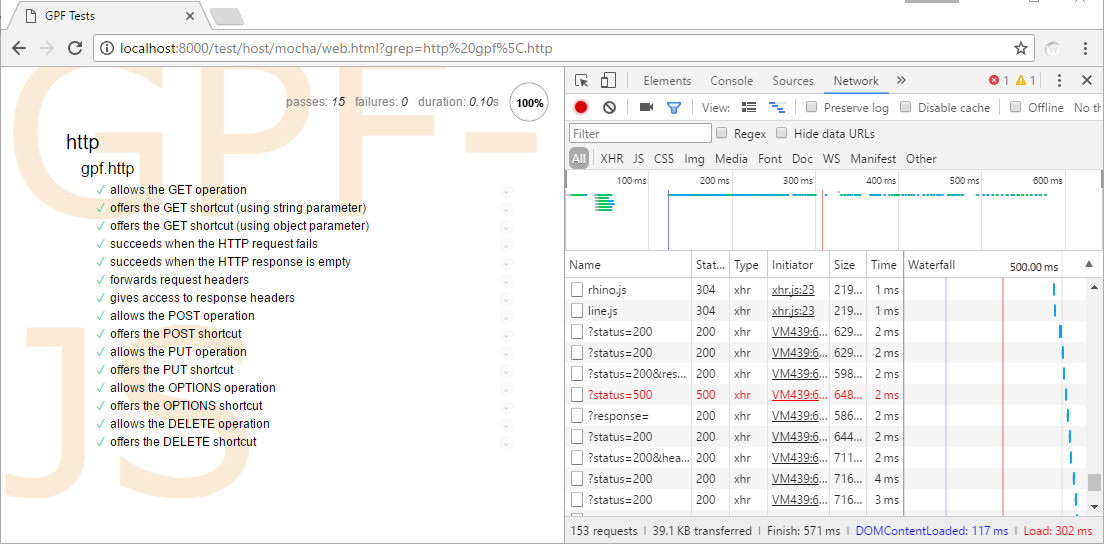

Test preview

Browser implementation is done inside src/http/xhr.js.

Two helpers are defined inside src/http/helpers.js.

Setting the request headers and sending the request data are done in almost the same way on three hosts. To avoid code duplication, these two helpers generate specialized versions capable of calling the host-specific methods.
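The idea of generating host-specific helpers can be sketched as two factories that take the name of the host method to call. The factory names `genSetHeaders` and `genSend` are hypothetical; the method names are the documented ones of each host object: `setRequestHeader` / `send` on XMLHttpRequest, `SetRequestHeader` / `Send` on WinHttpRequest, and `setRequestProperty` on Java's URLConnection.

```javascript
// Returns a function that applies a header dictionary through the given method name
function genSetHeaders (methodName) {
    return function (target, headers) {
        Object.keys(headers || {}).forEach(function (name) {
            target[methodName](name, headers[name]);
        });
    };
}

// Returns a function that sends the optional request body through the given method name
function genSend (methodName) {
    return function (target, data) {
        if (data === undefined) {
            target[methodName]();
        } else {
            target[methodName](data);
        }
    };
}

var xhrSetHeaders = genSetHeaders("setRequestHeader"),
    xhrSend = genSend("send"),
    winHttpSetHeaders = genSetHeaders("SetRequestHeader"),
    winHttpSend = genSend("Send"),
    javaSetHeaders = genSetHeaders("setRequestProperty");
```

Each host file then only picks the specialized pair it needs instead of repeating the loop.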

NodeJS

Besides being a JavaScript host, NodeJS comes with a complete set of APIs for a wide variety of tasks. Specifically, it comes with the http module.

Unlike in a browser, however, triggering an HTTP request requires some effort.

The http.request method allocates an http.ClientRequest. However, it expects a structure that details the web address. That's why the URL parsing API is needed.

The clientRequest object is also a writable stream that exposes the methods to push data over the connection. Since things are done at the lowest level, you are responsible for ensuring the consistency of the request details. In particular, the request headers must be set properly: for instance, forgetting the Content-Length header on a POST or a PUT leads to the HPE_UNEXPECTED_CONTENT_LENGTH error. The library takes care of that part.

Likewise, the response body is a readable stream. Fortunately, GPF-JS provides a NodeJS-specific stream reader, and _gpfStringFromStream deserializes its content into a string.
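A sketch of the NodeJS side, written directly against the core http and https modules rather than GPF-JS internals (`buildNodeOptions` and `nodeRequest` are hypothetical names). It covers the three points above: the URL string is parsed into the structure http.request expects, Content-Length is computed from the byte length of the body, and the response stream is accumulated into a string.

```javascript
var http = require("http"),
    https = require("https");

// Hypothetical helper: converts the settings into the options expected by http.request
function buildNodeOptions (settings) {
    var parsed = new URL(settings.url),
        headers = Object.assign({}, settings.headers);
    if (settings.data !== undefined) {
        // Byte length, not string length: "é" is one character but two UTF-8 bytes
        headers["Content-Length"] = Buffer.byteLength(settings.data, "utf8");
    }
    return {
        protocol: parsed.protocol,
        hostname: parsed.hostname,
        port: parsed.port || undefined, // an empty string means the default port
        path: parsed.pathname + parsed.search,
        method: settings.method,
        headers: headers
    };
}

function nodeRequest (settings) {
    return new Promise(function (resolve, reject) {
        var options = buildNodeOptions(settings),
            transport = options.protocol === "https:" ? https : http,
            clientRequest = transport.request(options, function (response) {
                var chunks = [];
                response.on("data", function (chunk) {
                    chunks.push(chunk);
                });
                response.on("end", function () {
                    resolve({
                        status: response.statusCode, // any status code resolves
                        headers: response.headers,
                        responseText: Buffer.concat(chunks).toString("utf8")
                    });
                });
                response.on("error", reject);
            });
        clientRequest.on("error", reject); // communication failure only
        if (settings.data !== undefined) {
            clientRequest.write(settings.data, "utf8"); // ClientRequest is a writable stream
        }
        clientRequest.end();
    });
}
```

Computing Content-Length from `Buffer.byteLength` rather than `data.length` matters as soon as the body contains non-ASCII characters.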

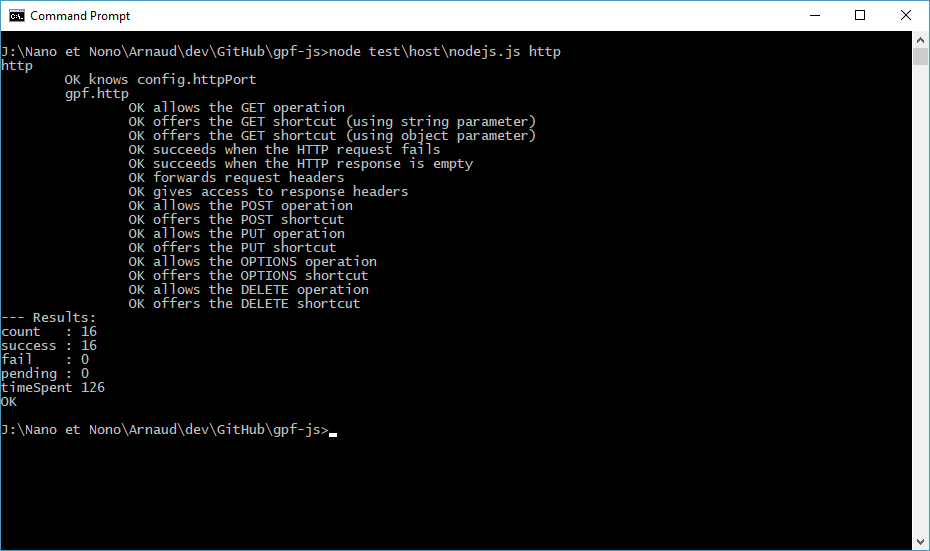

Test preview

NodeJS implementation is done inside src/http/nodejs.js.

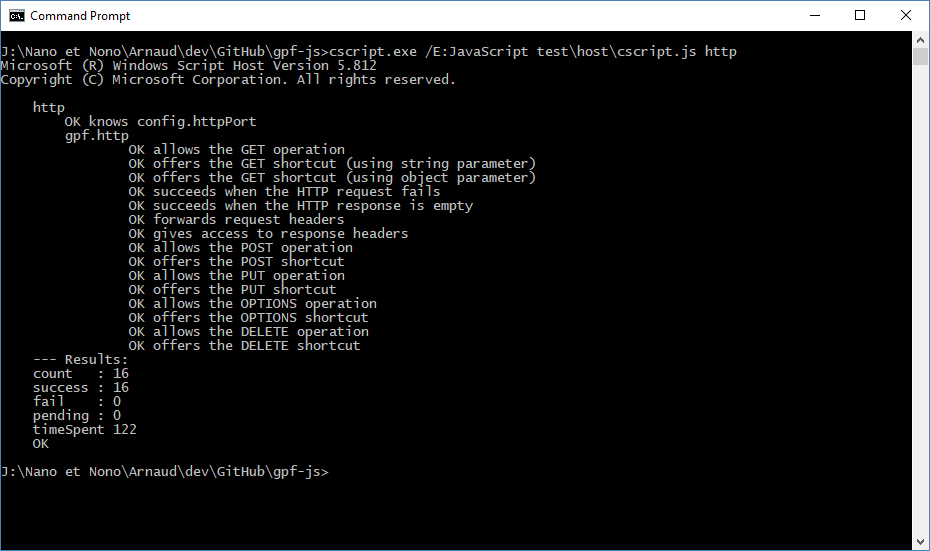

WScript

WScript is the scripting host available on almost all Microsoft Windows operating systems from Windows XP onward. It comes in two flavors:

- WScript.exe, which displays its output in dialog boxes

- cscript.exe, its command line counterpart

This host has rather old and quirky support for JavaScript features. It does not support timers, but GPF-JS provides all the necessary polyfills to compensate for the missing APIs.

Despite all these troubles, it has one unique advantage over the other hosts: it offers the possibility to manipulate COM components.

Indeed, the host-specific class ActiveXObject gives you access to thousands of external features within a script.

For instance, a few years ago, I created a script capable of reconfiguring a virtual host to fit user preferences and make it unique on the network.

Among the list of available objects, there is one that is used to generate HTTP requests: the WinHttp.WinHttpRequest.5.1 object.

Basically, it mimics the interface of the XMLHttpRequest object with one significant difference: its behavior is synchronous. As the GPF API returns a Promise, the developer does not have to care about this difference.
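Hiding the synchronous WinHttpRequest behind a Promise can be sketched as follows. Open, SetRequestHeader, Send, Status, ResponseText and GetAllResponseHeaders are members of the WinHttpRequest COM object; the `winHttpRequest` function and its optional `factory` parameter are hypothetical, added so that the logic can be exercised outside WScript, where ActiveXObject does not exist. Promise is assumed to be provided by a polyfill on this host, and the code sticks to ES3 constructs (for...in instead of Object.keys).

```javascript
function winHttpRequest (settings, factory) {
    var create = factory || function () {
        return new ActiveXObject("WinHttp.WinHttpRequest.5.1");
    };
    return new Promise(function (resolve, reject) {
        var winHttp, name;
        try {
            winHttp = create();
            // Third parameter false: synchronous mode, Send() blocks until the answer
            winHttp.Open(settings.method, settings.url, false);
            for (name in settings.headers) {
                if (settings.headers.hasOwnProperty(name)) {
                    winHttp.SetRequestHeader(name, settings.headers[name]);
                }
            }
            if (settings.data === undefined) {
                winHttp.Send();
            } else {
                winHttp.Send(settings.data);
            }
            resolve({
                status: winHttp.Status,
                rawHeaders: winHttp.GetAllResponseHeaders(),
                responseText: winHttp.ResponseText
            });
        } catch (e) {
            reject(e); // the COM object throws on communication failures
        }
    });
}
```

The request completes inside the executor, yet `then` callbacks still run later, so callers see the same asynchronous contract as on the other hosts.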

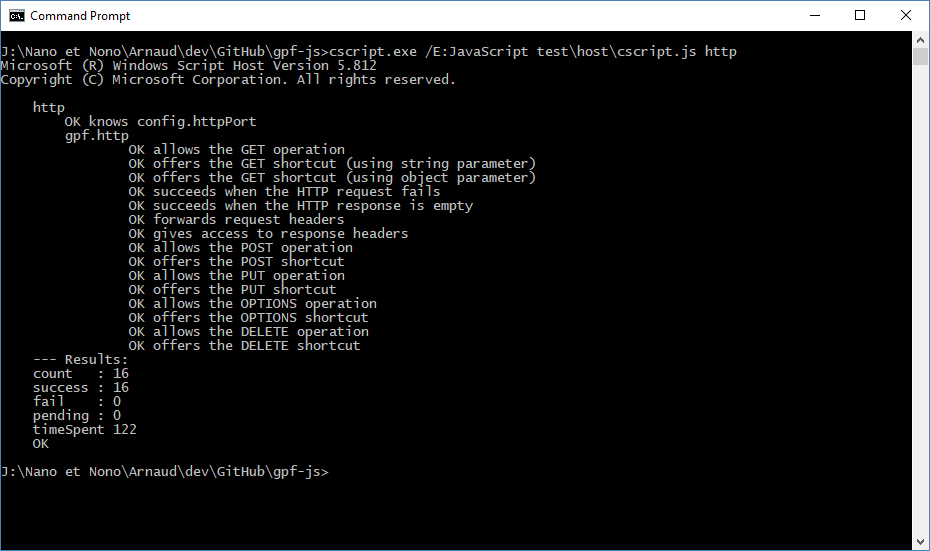

Test preview

WScript implementation is done inside src/http/wscript.js.

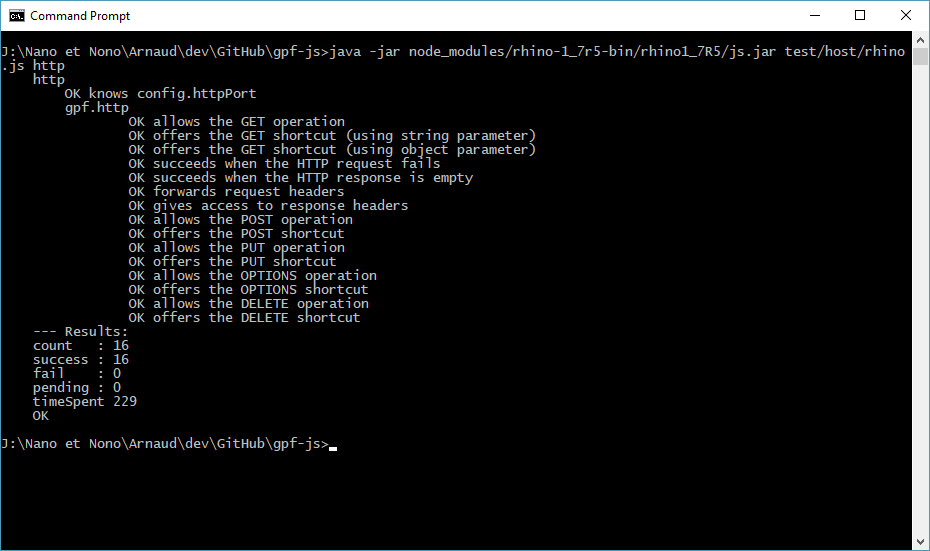

Rhino

Rhino is probably one of the most challenging (and fun) environments because it is based on Java.

The fascinating aspect of Rhino comes from the tight integration between the two languages. Indeed, the JavaScript engine is implemented in Java and you can access any Java class from JavaScript. In terms of language support, it is almost the same as WScript: no timers and a relatively old specification. Here again, the polyfills fill in the blanks.

To implement HTTP requests, one has to figure out which part of the Java platform to use. After some investigation (thanks, Google), the solution turned out to be the java.net.URL class.

As with NodeJS, Java streams are used to send or receive data over the connection. Likewise, the library offers Rhino-specific stream implementations.

Stream reading works by consuming bytes. To read text, a java.util.Scanner instance is used.

Surprisingly, if the status code is in the 5xx range, reading the response stream fails and the error stream must be used instead.
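Reading the response on Rhino, with the error stream fallback, can be sketched as below. java.net.URL, HttpURLConnection (setRequestMethod, getResponseCode, getInputStream, getErrorStream) and the java.util.Scanner "\\A" idiom are standard Java; `rhinoRead` and its `openConnection` / `readText` parameters are hypothetical, so that the logic can be tried with fakes outside Rhino. Rather than testing the status range, the sketch falls back to the error stream whenever getInputStream throws, which HttpURLConnection does when the server answers with an error status. Sending a request body is left out to keep it short.

```javascript
// Default Rhino bindings, only evaluated when the function is called without fakes
function defaultOpenConnection (url) {
    return new java.net.URL(url).openConnection(); // an HttpURLConnection
}
function defaultReadText (stream) {
    // "\\A" (start of input) as delimiter makes next() return the whole stream
    var scanner = new java.util.Scanner(stream, "UTF-8").useDelimiter("\\A"),
        text = scanner.hasNext() ? String(scanner.next()) : "";
    scanner.close();
    return text;
}

function rhinoRead (settings, openConnection, readText) {
    var open = openConnection || defaultOpenConnection,
        read = readText || defaultReadText;
    return new Promise(function (resolve, reject) {
        try {
            var connection = open(settings.url),
                status,
                stream;
            connection.setRequestMethod(settings.method);
            status = connection.getResponseCode(); // sends the request
            try {
                stream = connection.getInputStream();
            } catch (e) {
                // Error status: the body is only available through the error stream
                stream = connection.getErrorStream();
            }
            // getErrorStream may return null when the server sent no body
            resolve({status: Number(status), responseText: stream ? read(stream) : ""});
        } catch (e) {
            reject(e); // communication failure (unknown host, refused connection...)
        }
    });
}
```

In Rhino, Java exceptions can be caught by a JavaScript try / catch, which is what makes this fallback possible.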

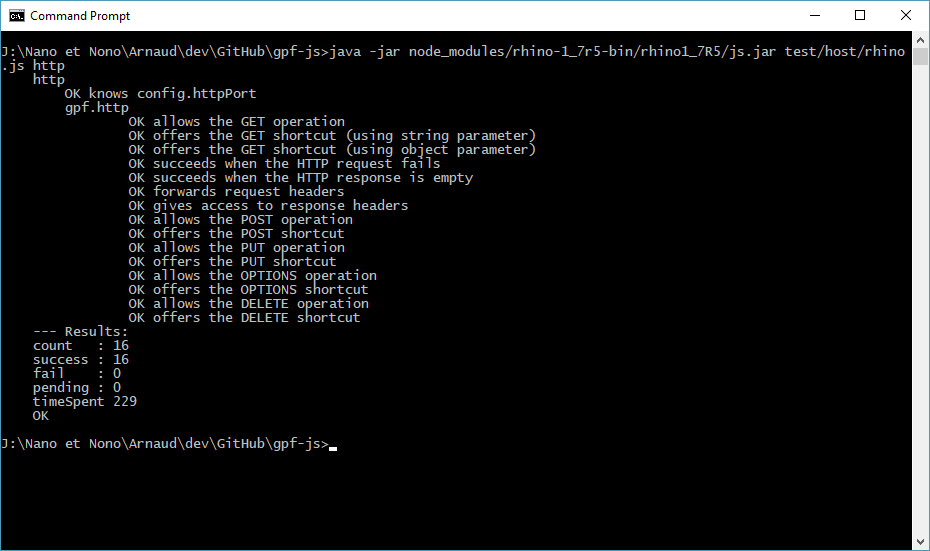

Test preview

Rhino implementation is done inside src/http/rhino.js.

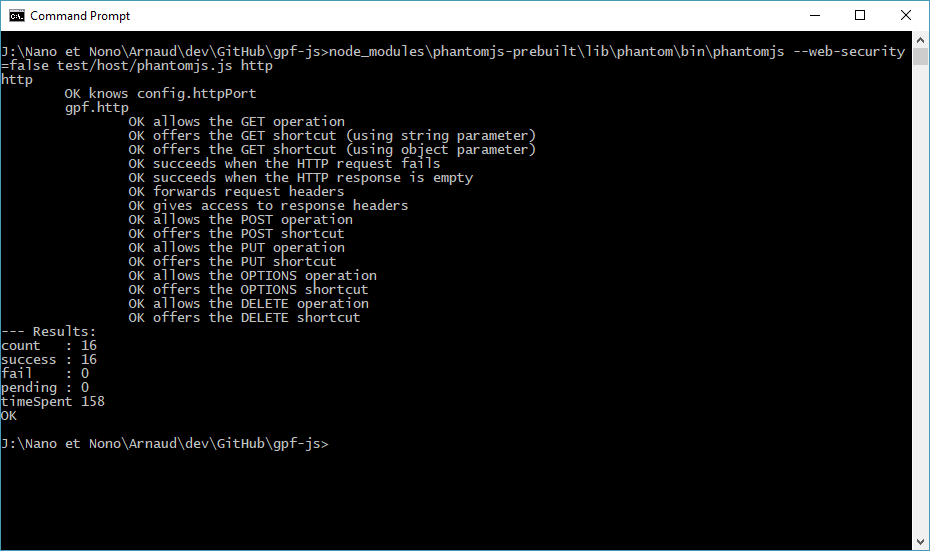

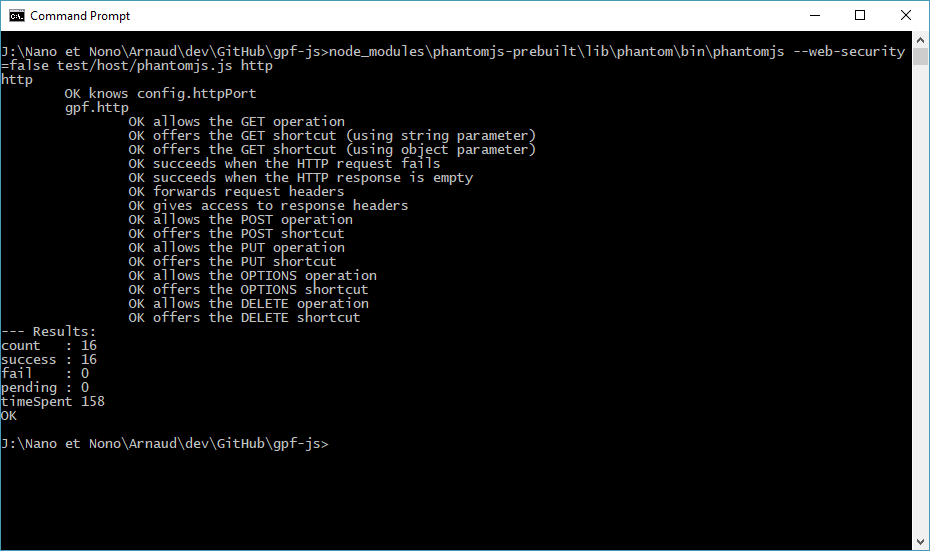

PhantomJS

In a nutshell, PhantomJS is a command line tool that simulates a browser. It is mainly used to script access to websites, and it is the perfect tool for test automation.

There are basically two styles of PhantomJS scripts:

- On one hand, it browses a website and simulates what would happen in a browser

- On the other hand, it is a command line executing some JavaScript code

As a matter of fact, GPF-JS uses both:

- mocha is used to automate browser testing with PhantomJS

- a dedicated command line runs the test suite without any web page

In this environment, the XMLHttpRequest JavaScript class is available.

However, as in a browser, this host is subject to security restrictions: you are not allowed to request a server other than the one that served the opened page.

Luckily, you can bypass this constraint using a command line parameter: --web-security=false.

Test preview

PhantomJS implementation is done inside src/http/xhr.js.

Conclusion

If you survived the whole article, congratulations (and sorry for the broken English).

Now you might be wondering...

What's the point?

Actually, this kind of challenge satisfies my curiosity. I learned a lot by implementing this feature, and it was immediately applied to greatly improve the coverage measurement.

Indeed, each host is tested with instrumented files, and the collected coverage data is serialized to be consolidated and reported on later. However, as of today, only two hosts support file storage: NodeJS and WScript. Thanks to the HTTP support, all hosts now send their coverage data to the fs middleware, which generates the file.

Q.E.D.


The version 0.2.1 of the GPF-JS library delivers an HTTP request helper that can be used on all supported hosts.

It was quite a challenge, as it required five different implementations. Here are the details.
