Since I work for a big company, I must explain that I take full responsibility for the information and views set out in this article. Also, the "Back to the Future" pictures were gathered from the web; they are copyrighted by Universal City Studios, Inc. and U-Drive Joint Venture.

Happy New Year 2016!

2015 was already the future; imagine what will happen in 2016!

First of all, I'd like to wish you a happy new year 2016! May your lives, studies, careers, projects and everything that makes sense to you be successful.

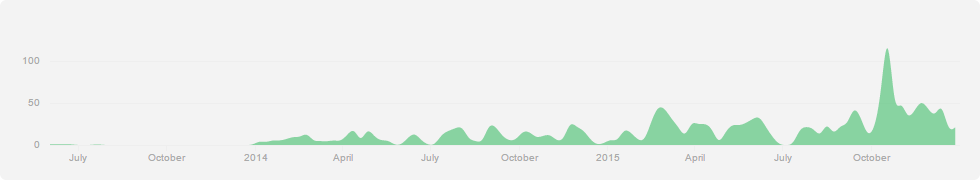

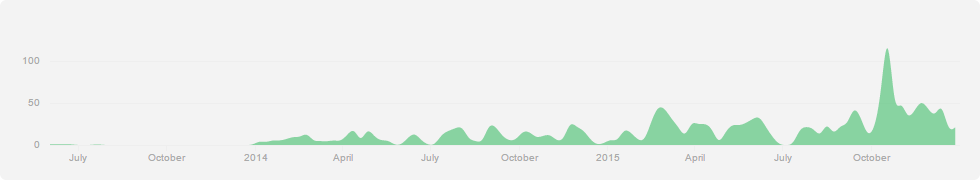

This is a good time to look back on what happened and make new resolutions for the coming year. As for me, I would like to present a personal project that I have been working on for the last two years and that I greatly improved over the last months.

GPF-JS GitHub contributions

First, let's set the context

This is a pretty long story but I will try to make it short for the sake of your network bandwidth.

Work experience with JavaScript

If you look at my profile, you will see that my longest experience was as a software engineer in a French company, and this is where I learnt most of what I know about coding today. In the beginning (i.e. 15 years ago), we were using JavaScript as a middleware language to glue C/C++ components together. Because I was surrounded by skilled people, advanced notions like prototypal inheritance and code generation were known and wisely used.

Moving to the cloud, the company created an ASP.NET web application; scripting was used to implement small behaviors, as the HTML was generated on the server side.

But in recent years, I witnessed the growing importance of JavaScript as it became used on both the back end and the front end.

Personal experience with JavaScript

I would not claim that I had a natural preference for this language: I have also coded in C, C++, Pascal (... remember Delphi?), VBA (yuck!), C# and a little bit of Java...

However, I quickly identified the main advantages of JavaScript over the other languages:

- No specific IDE or compilation step is needed: use Notepad to edit the source, then run the file. There is no quicker way to develop... and this is what I did for a very long time.

- Compatibility: within browsers, JavaScript is available almost everywhere. I discovered later that NodeJS also makes it available from the command line.

I decided to invest my personal time learning more about the language.

You want me to buy a subscription to the 'JavaScript Journal'?

Because I am a lazy developer, I created a series of command line tools (using cscript) to automate some of my tasks. This later became a unified toolkit that was adopted by several teams to help them in their daily job.

Also, as I am curious and my son likes Pokemon, I started to create a card game simulator.

From the tools, I obtained a collection of JavaScript helpers (consolidated in a library), a good understanding of the language basics and a solid sense of debugging with printouts.

At that time, debugging cscript was so cumbersome that I relied on console output to understand the issues. This forced me to analyze and understand the problems instead of 'seeing' them in a debugger.

On the other hand, the game helped me experiment with object-oriented programming in JavaScript and all the troubles (...I mean fun stuff...) related to web development.

So far, I had not been exposed to any framework: I started using jQuery for the game but quickly realized I was only using its query selector API. I have to say that it turned out to be a blessing: I learned all the basics of web development with vanilla JavaScript.

Canadian experience with JavaScript

Then, I had to quit my job to move to Canada.

It's cold out there

As I have been quite successful with my card game simulator, I found a job as a web developer in a startup. This is where I discovered ExtJS and its build process. I also got familiar with NodeJS by creating tools to analyze the code or to mock the server.

Later, I moved to another company that was relying on AngularJS. I quickly became fluent with the framework and the associated web development tooling (such as Grunt).

Now I work for a big company where I use my JavaScript expertise to build cloud solutions.

The idea

From these experiences, I learned a lot. I have also been frustrated because I knew I was only scratching the surface of something bigger. When you look at the number of libraries and frameworks that exist now, you realize how versatile and powerful the JavaScript language is. During my research, I had some ideas that I wanted to put into action in order to see if they would really be helpful or valuable.


Basically, my flux capacitor is composed of:

So I started to develop a library (not a framework, reasons detailed below) that would help me implement these.

Because it is my own project, I also wanted to take on the following challenges:

Building a library

Framework vs library

First of all, I would like to clarify my vision of the difference between a framework and a library.

Please note that this is a personal interpretation based on my experience. Don't read it as a universal definition that applies to all existing frameworks or libraries.

On one hand, a framework is designed to simplify complex tasks with the objective of building a given result (such as a single page application). Usually, it helps you build something faster, but at the cost of forcing the developer to understand and apply its specific logic. As soon as you want to escape from the expected structure, things get ugly. This last statement is obviously tempered by the framework's ability to accept such changes (some simply don't). In that sense, ExtJS and AngularJS are frameworks.

On the other hand, a library solves a problem with a narrow scope: it doesn't try to do everything. In that sense, jQuery and Backbone.js are libraries.

Looking at what I want to achieve, I am trying to create a collection of helpers to simplify JavaScript development in general.

Organizing sources

Let's rock!

The very first library I created in JavaScript was a big mess (script.lib.js, for those who still use it... sorry):

- Everything was written in a single - huge - file... maintained on my drive (versioning was done by zipping the file and renaming it manually)

- I had no clue about minification tools, so I named my variables with single letters and added a comment to remember what they were used for (the worst thing to do). Then, a simple command line stripped the comments as well as the unnecessary formatting characters to generate the 'release' build.

For instance, I wrote:

```javascript
function capitalize(s) {
    return s[0].toUpperCase() + s.substr(1);
}
```

To generate:

```javascript
function capitalize(s) {return s[0].toUpperCase()+s.substr(1);}
```

- I had no clear understanding of private scoping so everything was created globally (and easily 'hackable')
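To illustrate what such a naive 'release' step does, here is a minimal sketch of a comment and whitespace stripper. It is not the original command line: it assumes that no `//` or `/*` appears inside string or regular expression literals, and that every statement ends with an explicit semicolon (newlines are collapsed, so code relying on automatic semicolon insertion would break). These assumptions are precisely why real minifiers parse the code instead of using regular expressions.

```javascript
// A deliberately naive "release build" step: strip comments, then squeeze
// formatting characters. Assumptions (for illustration only):
// - no "//" or "/*" inside string or regular expression literals;
// - every statement ends with an explicit ";" (newlines become spaces).
function naiveStrip(source) {
    return source
        // Remove block comments /* ... */, possibly spanning several lines.
        .replace(/\/\*[\s\S]*?\*\//g, "")
        // Remove line comments, from "//" to the end of the line.
        .replace(/\/\/[^\n]*/g, "")
        // Collapse every run of whitespace (newlines included) into one space.
        .replace(/\s+/g, " ")
        // Spaces next to braces and semicolons are never needed in code.
        .replace(/\s*([{};])\s*/g, "$1")
        .trim();
}

const source = [
    "/* s: the string to capitalize */",
    "function capitalize(s) {",
    "    // upper-case the first character",
    "    return s[0].toUpperCase() + s.substr(1);",
    "}"
].join("\n");

console.log(naiveStrip(source));
// prints: function capitalize(s){return s[0].toUpperCase() + s.substr(1);}
```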

I learned by making mistakes and, after several attempts, I came up with a better organization:

- Features are grouped by modules, a module is a single JavaScript source file (for instance: error)

- Modules do not declare any private context (no classical IIFE): it is injected afterward by the build process (see next part)

- Each module may import and export 'internals': the linters' global and exported directives are leveraged to consolidate (and check) the list of dependencies and to update the documentation within each module (this is done by make/globals.js)

- The list of modules is consolidated in a unique source file: a tag decides which modules are in the library.
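The dependency consolidation described above could be sketched as follows. This is a hypothetical illustration, not the content of make/globals.js: it reads the `/*global ...*/` and `/*exported ...*/` directives understood by JSHint and ESLint from each module source, then maps every imported name to the module that exports it. The module names and `_gpf...` symbols are made up for the example.

```javascript
// Returns the names listed in every /*<keyword> a, b, c*/ comment of `source`.
function readDirective(source, keyword) {
    const pattern = new RegExp("/\\*\\s*" + keyword + "\\s+([^*]*)\\*/", "g");
    const names = [];
    let match;
    while ((match = pattern.exec(source)) !== null) {
        match[1].split(",").forEach(function (entry) {
            // "name:true" (JSHint writable flag) -> "name"
            const name = entry.split(":")[0].trim();
            if (name) {
                names.push(name);
            }
        });
    }
    return names;
}

// Maps each module to the modules it depends on; throws on unknown imports.
function checkDependencies(sources) {
    const exportedBy = {};
    Object.keys(sources).forEach(function (moduleName) {
        readDirective(sources[moduleName], "exported").forEach(function (symbol) {
            exportedBy[symbol] = moduleName;
        });
    });
    const dependencies = {};
    Object.keys(sources).forEach(function (moduleName) {
        const used = [];
        readDirective(sources[moduleName], "global").forEach(function (symbol) {
            if (!Object.prototype.hasOwnProperty.call(exportedBy, symbol)) {
                throw new Error(moduleName + " imports unknown symbol " + symbol);
            }
            if (used.indexOf(exportedBy[symbol]) === -1) {
                used.push(exportedBy[symbol]);
            }
        });
        dependencies[moduleName] = used;
    });
    return dependencies;
}

// Made-up module sources (a real build would read them from files).
const sources = {
    constants: "/*exported _gpfHost*/\nvar _gpfHost = \"nodejs\";",
    string: "/*global _gpfHost*/\n/*exported _gpfCapitalize*/\nvar _gpfCapitalize;",
    error: "/*global _gpfHost, _gpfCapitalize*/\n/*exported _gpfError*/\nvar _gpfError;"
};

console.log(JSON.stringify(checkDependencies(sources)));
// prints {"constants":[],"string":["constants"],"error":["constants","string"]}
```

In this sketch every `/*global*/` name must be exported by another module; a real tool would also need to whitelist host globals such as `console`.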

I even wrote a coding guideline!

Minification & build process

When you develop a library, you must think about its publication (or at least the way you want to use it): ideally, you produce a single file that has been minified (to reduce its size) and possibly obfuscated (you may want to protect some of your code).

A quick note about obfuscation: it is impossible to fully obfuscate JavaScript code. At best, you can make it really hard to read for someone who wants to reverse engineer it but, in the end, the browser needs a valid JavaScript source to run it.

Once the sources are organized, a workflow must be set up to concatenate them and generate the result.
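As a minimal sketch of that concatenation step, assuming the modules are already sorted by dependency and declare their internals with plain top-level `var`/`function` statements, the build can wrap them all in a single IIFE so that only the returned object is public. The `buildLibrary` helper and the module contents are hypothetical.

```javascript
// Hypothetical build step: concatenate module sources (already sorted by
// dependency) inside one IIFE. Top-level var/function declarations of the
// modules become private; only the returned object is exposed.
function buildLibrary(orderedSources, publicApi) {
    return [
        "(function () {",
        "    \"use strict\";",
        orderedSources.join("\n"),
        "    return " + publicApi + ";",
        "}())"
    ].join("\n");
}

const librarySource = buildLibrary([
    "var _gpfPrefix = \"Hello, \";",
    "function _gpfGreet(name) { return _gpfPrefix + name; }"
], "{ greet: _gpfGreet }");

// Evaluate the generated source and keep its public API.
const gpf = new Function("return " + librarySource + ";")();

console.log(gpf.greet("Marty")); // prints Hello, Marty
console.log(typeof _gpfPrefix);  // prints undefined: internals did not leak
```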

A long time ago, I documented my thoughts in an article about Release and debug versions.

Also, remember that when your library is composed of several files, you want to be able to test it directly from the sources. Otherwise, working on the sources becomes tricky, as you would have to regenerate the 'compiled' version after every change.

Here again, I made several mistakes regarding the build process:

- I did not automate this process from the beginning... this was addressed later using Grunt (more on that below)

- I had trouble with the first results: how do you validate that the generated file works as expected? I had to build a complete test suite (TDD!)

- I wanted to experiment with conditional building (#ifdef) and optimizations (inlining, not done yet)

- Initially, I wanted to do the minification myself... but how do you parse JavaScript? I first tried to parse it manually. Indeed, I have a working tokenizer (used for syntax highlighting), but the JavaScript grammar is complex. That's why I now rely on the AST generated by esprima: the structure is manipulated and the code is rewritten using escodegen. However, the list of transformations needed to reach a good compression ratio is huge... for a very small benefit, considering that such tools already exist. So I integrated the Google Closure Compiler to compress the release version.
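Conditional building can be sketched with a small line-based preprocessor. The `/*#ifdef(NAME)*/` ... `/*#endif*/` marker syntax is an assumption made for this example (hiding the directives in comments keeps the source version runnable as-is); it is not necessarily the syntax used by the library's build.

```javascript
// Minimal line-based preprocessor. Hypothetical marker syntax, one per line:
//   /*#ifdef(NAME)*/  ...lines...  /*#endif*/
// Lines inside a block are kept only if NAME is in `defines`; nesting works.
function preprocess(source, defines) {
    const ifdefPattern = /^\s*\/\*#ifdef\((\w+)\)\*\/\s*$/;
    const endifPattern = /^\s*\/\*#endif\*\/\s*$/;
    const kept = []; // one entry per open block: true if its NAME is defined
    const output = [];
    source.split("\n").forEach(function (line) {
        const ifdef = ifdefPattern.exec(line);
        if (ifdef) {
            kept.push(defines.indexOf(ifdef[1]) !== -1);
        } else if (endifPattern.test(line)) {
            if (kept.length === 0) {
                throw new Error("#endif without #ifdef");
            }
            kept.pop();
        } else if (kept.every(Boolean)) {
            // Keep the line only if every enclosing block is enabled.
            output.push(line);
        }
    });
    if (kept.length !== 0) {
        throw new Error("unterminated #ifdef");
    }
    return output.join("\n");
}

const source = [
    "function capitalize(s) {",
    "    /*#ifdef(DEBUG)*/",
    "    if (typeof s !== \"string\") { throw new TypeError(\"s must be a string\"); }",
    "    /*#endif*/",
    "    return s[0].toUpperCase() + s.substr(1);",
    "}"
].join("\n");

console.log(preprocess(source, ["DEBUG"])); // debug build keeps the type check
console.log(preprocess(source, []));        // release build drops it
```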

The AST transformation is also used to inject the different modules inside a Universal Module Definition (UMD) adapted to my needs. As a consequence, the library can be used in all environments in the most natural way:

In the end, the library comes in three flavors:

- The source version: obtained by loading the boot.js module

- The debug version: the non-minified concatenated-like version

- The release version: the minified & optimized concatenated-like version
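For reference, here is a sketch of the widely used "returnExports" UMD pattern, generated around a library body in the spirit of the injection described above. It is the generic pattern, not the variant adapted for this library; `wrapInUmd`, `runIn` and the global name `gpf` are illustrative. The `runIn` helper simulates each environment by passing `define`, `module` and the global object explicitly.

```javascript
// Generic "returnExports" UMD header wrapped around a library body. The body
// must end by returning the public API; `globalName` is used by hosts that
// have neither an AMD loader nor CommonJS (browsers, other script hosts).
function wrapInUmd(bodySource, globalName) {
    return [
        "(function (root, factory) {",
        "    if (typeof define === \"function\" && define.amd) {",
        "        define([], factory);",
        "    } else if (typeof module === \"object\" && module.exports) {",
        "        module.exports = factory();",
        "    } else {",
        "        root." + globalName + " = factory();",
        "    }",
        "}(this, function () {",
        bodySource,
        "}));"
    ].join("\n");
}

// Evaluates the generated source with explicit define/module/global objects,
// to simulate an AMD loader, CommonJS, or a plain global environment.
function runIn(source, define, module, root) {
    new Function("define", "module", source).call(root, define, module);
}

const umdSource = wrapInUmd("return { version: \"0.1\" };", "gpf");

// Plain global environment (e.g. a browser window or a script host).
const fakeGlobal = {};
runIn(umdSource, undefined, undefined, fakeGlobal);
console.log(fakeGlobal.gpf.version); // prints 0.1

// CommonJS environment (e.g. NodeJS).
const fakeModule = { exports: {} };
runIn(umdSource, undefined, fakeModule, {});
console.log(fakeModule.exports.version); // prints 0.1
```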

JavaScript Development (with a capital D)

Linters

A linter is a tool that validates the code while you are typing it. It identifies common errors (undeclared or unused variables, missing semicolons... and many more), and preferences can be defined through configuration.

The first time I was exposed to JSHint was in a professional environment (my first Canadian experience). I was annoyed by the tool as it blocked most of my pushes to the repository. At that time, I was convinced that my code style was perfect and I saw the errors as meaningless (they were mainly styling errors).

But, after some time, I understood (and acknowledged) the two great advantages of using it:

- It eliminates common errors: since JavaScript code does not need to be compiled before it runs, this step is the one that checks the code. Otherwise, you have no clue about an error until the faulty line is evaluated.

This is also why it is extremely important to make sure that every line of code is tested.

- When working in a team, this tool ensures that everybody applies the same formatting rules and conventions. You may also forbid specific syntaxes such as the use of the ternary operator. It is a way to produce uniform code (and it is also useful when working alone on a big project).
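To make the first point concrete, here is a hypothetical function whose typo hides in a branch that ordinary inputs never reach. Running the code with typical values reveals nothing; a linter reports the undeclared variable immediately (ESLint's `no-undef` rule, or JSHint's `undef` option). The typo is deliberate.

```javascript
// Hypothetical example with a deliberate typo ("mesage") in a rarely used
// branch. Nothing complains until that exact line is evaluated; a linter
// flags it right away as an undeclared variable.
function formatTemperature(celsius) {
    var message = celsius + " C";
    if (celsius < -273.15) {
        message = "impossible temperature: " + celsius;
        return mesage; // deliberate typo: throws ReferenceError when reached
    }
    return message;
}

console.log(formatTemperature(20)); // prints 20 C

try {
    formatTemperature(-300);
} catch (e) {
    console.log(e.name); // prints ReferenceError
}
```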

So I integrated JSHint at the beginning of 2014 and it actually took me a long time to adapt the code accordingly.

Earlier this year, I wrote an article about Code analysis.

Actually, I discovered ESLint after writing that article. I was interested in building custom rules (as you can read in that article) and I checked what I could do with ESLint. This tool offers more options and has a unique custom rule mechanism based on AST parsing. All rules are clearly documented and, for each of them, you may decide either:

- to ignore it

- to generate a warning

- to generate an error

To be honest, I am not convinced of the value of generating warnings if they do not block the build process. The only reason would be to draw attention to specific issues that can be addressed later but, in the end, you need to force the developer to act or you will suffer from the broken window effect.

Enabling ESLint also took me a while because I had to review all rules to configure it and then modify the code (again).
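As an illustration of the three levels, here is a sketch of an ESLint configuration. It uses the legacy `.eslintrc.js` format (recent ESLint versions default to a flat `eslint.config.js` file, but the `rules` object has the same shape), and the rule selection is only an example, not the project's actual configuration.

```javascript
// .eslintrc.js (legacy format), illustrative rule selection only.
module.exports = {
    env: {
        browser: true,
        node: true
    },
    rules: {
        // "off" (or 0): the rule is ignored.
        "no-console": "off",
        // "warn" (or 1): reported, but does not make the lint run fail.
        "max-len": ["warn", { code: 120 }],
        // "error" (or 2): reported and makes the lint run (and the build) fail.
        "no-undef": "error",
        "no-unused-vars": "error",
        "semi": ["error", "always"],
        // Forbids the ternary operator, as mentioned in the team argument above.
        "no-ternary": "error"
    }
};
```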

Grunt

It is all about automation

As a lazy developer, I don't like to do the same thing twice. Hence, I try to automate my tasks as much as possible. It all started with DOS command lines, but I was concerned about the portability of the project: free Continuous Integration platforms (like Travis CI) expect standard tools to be configured.

So I started integrating Grunt in May 2015: first with JSHint, then I automated the test execution.

Now, these tasks are automated through Grunt:

- JSHint & ESLint code validation

- Code coverage evaluation and statistics update into the README.md with metrics.js

- Plato execution (see below)

- Test execution (NodeJS, PhantomJS, Rhino, cscript)

- Build process (make.js)

- Publication to the public repository

By the way, I recommend reading Supercharging your Gruntfile.

I also learned how to generate the configuration 'on the fly'. Indeed, the power of Grunt comes from the fact that the configuration file (gruntfile.js) is a JavaScript file that is evaluated within NodeJS.

Some may protest that Gulp is way better than Grunt. I checked some articles (just google Grunt vs Gulp) but it reminds me of the good old debates (Atari vs Amiga, Nintendo vs Sega, PC vs Console...). It may be true, but Grunt does the job.

Test Driven Development

When this baby hits 88 miles per hour, you're gonna see some serious s...

One of my last articles was about My own BDD implementation.

I won't repeat the benefits of testing here, but testing actually came late in my project.

I first started with a custom testing mechanism, but it was mostly designed for the web: I was not happy with the NodeJS integration. Then I decided to integrate Mocha, as it works in both environments (and integrates with Grunt). But then I wanted a solution for the cscript host (and later Rhino). This is why I started my own BDD framework.

I was exposed to Jasmine and QUnit and, in the end, they all do the job properly. Most of the time, it is a matter of syntax and framework-specific tools. These test frameworks are mature and well documented, so I suggest checking the website of each one to see which fits your needs.
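The key requirement for such a framework is to avoid any host-specific API, so that the same tests run under NodeJS, browsers, Rhino and cscript. Here is a minimal sketch of a `describe`/`it` runner under that constraint; it does not reproduce the author's framework and supports neither nested `describe` nor asynchronous tests. It sticks to ES3-style JavaScript (`var`, `for` loops, no `forEach`) because cscript's JScript engine lacks newer syntax and array methods, and output goes through an injected `report` function because each host prints differently (`console.log`, `print` in Rhino, `WScript.Echo` in cscript).

```javascript
// Minimal describe/it runner without host-specific APIs (illustrative only).
var suites = [];
var currentSuite = null;

function describe(label, body) {
    currentSuite = { label: label, tests: [] };
    suites.push(currentSuite);
    body(); // collects the it() calls of this suite
    currentSuite = null;
}

function it(label, body) {
    currentSuite.tests.push({ label: label, body: body });
}

// Runs all collected tests; a test fails when its body throws.
// `report` receives one line per test, so each host can print it its own way.
function run(report) {
    var passed = 0, failed = 0, i, j, suite, test;
    for (i = 0; i < suites.length; i += 1) {
        suite = suites[i];
        for (j = 0; j < suite.tests.length; j += 1) {
            test = suite.tests[j];
            try {
                test.body();
                passed += 1;
                report("ok   " + suite.label + " " + test.label);
            } catch (e) {
                failed += 1;
                report("FAIL " + suite.label + " " + test.label + ": " + e.message);
            }
        }
    }
    return { passed: passed, failed: failed };
}

describe("capitalize", function () {
    it("upper-cases the first letter", function () {
        var s = "gpf";
        if (s.charAt(0).toUpperCase() + s.substr(1) !== "Gpf") {
            throw new Error("unexpected result");
        }
    });
});

// NodeJS and browsers: console.log; Rhino: print; cscript: WScript.Echo.
var result = run(function (line) { console.log(line); });
```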

Code coverage

The code is tested and, following Uncle Bob's rules, only the logic required to pass the tests was implemented. One important question remains: is there any path in the code that was not executed during the tests?

Why is it critical? In my opinion, we are still talking about testing here. Indeed, I remember this simple rule: if it is not tested, it does not work. Eventually, the code you are developing will be used and, even if you extensively document the API, someone will find (or look for) ways to reach this untested code.

Sadly, even if you have 100% code coverage, there are still ways to break the code. For instance, let's imagine a function that divides two numbers. In one test, you get 100% coverage. But what happens if you divide by 0? What happens if you divide by a very small number (will you have an overflow?). These are edge cases but they exist (and they probably should be handled by the function). The least we can do is to make sure that each line of code is executed at least once.
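The division example can be written down to show the gap between coverage and correctness. In this hypothetical sketch, a single test executes every line of `divide`, yet the edge cases mentioned above still produce surprising results; `safeDivide` shows one possible policy (throwing a `RangeError`, which is an assumption), and that policy in turn adds branches that need their own tests.

```javascript
// Hypothetical example: one test runs every line of divide(),
// so statement coverage is 100%...
function divide(a, b) {
    return a / b;
}

console.log(divide(6, 3)); // prints 2

// ...yet the edge cases are not handled: JavaScript numbers do not throw here.
console.log(divide(1, 0));          // prints Infinity
console.log(divide(0, 0));          // prints NaN
console.log(divide(1e308, 1e-308)); // prints Infinity (overflow)

// One possible policy (an assumption): reject these cases explicitly.
// Each new branch now needs its own test to keep the coverage complete.
function safeDivide(a, b) {
    if (b === 0) {
        throw new RangeError("division by zero");
    }
    const result = a / b;
    if (!isFinite(result)) {
        throw new RangeError("result out of range");
    }
    return result;
}
```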

The only way to keep track of the different execution paths is to instrument the code and collect the information during the tests. As a result, an exhaustive report must be generated as well as an estimate of the code coverage.
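What instrumentation does can be illustrated by hand. In this simplified sketch, each branch increments a counter and the report is computed from the counters after the tests run. Real tools (such as Istanbul) rewrite the code automatically and track statements, branches and functions with their own data structures, so the counter names used here are hypothetical.

```javascript
// Hand-instrumented code: each branch bumps a counter when it executes.
const coverage = { branches: { "abs:negative": 0, "abs:positive": 0 } };

function abs(x) {
    if (x < 0) {
        coverage.branches["abs:negative"] += 1;
        return -x;
    }
    coverage.branches["abs:positive"] += 1;
    return x;
}

// Percentage of branches executed at least once.
function branchCoverage() {
    const counters = Object.keys(coverage.branches).map(function (key) {
        return coverage.branches[key];
    });
    const hit = counters.filter(function (count) { return count > 0; }).length;
    return (100 * hit) / counters.length;
}

abs(5);
console.log(branchCoverage() + "%"); // prints 50%
abs(-5);
console.log(branchCoverage() + "%"); // prints 100%
```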

Ideally, one should target 100% coverage but, in the real world, it is extremely hard to reach. It is even more difficult if you handle different platforms (or hosts). Fortunately, coverage tools may offer ways to ignore some paths (with the risks you know).

The current code coverage (as of today) is:

- Statements: 90% (9% ignored)

- Branches: 88% (10% ignored)

- Functions: 85% (14% ignored)

I had to develop several tricks to increase this coverage:

- Some 'internal' functions are exposed by the source version (through gpf.internal, for instance in string.js) to validate the algorithms

- I had to tweak the code coverage instrumentation because some of the functions are generated and this breaks the access to the coverage structure. It is done by the grunt task fixInstrument

Code analysis

The code is linted, tested and the code coverage is maximal.

What about the code quality? Can it be quantified or is there any way to objectively measure how easy the code will be to maintain?

Plato is a tool designed to visualize the code complexity.

The latest analysis done on the library shows an average maintainability around 73.
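For context, maintainability scores of this kind are usually derived from the classic maintainability index formula, which combines Halstead volume, cyclomatic complexity and lines of code. The sketch below computes that classic form; Plato may apply a variant or a different scale, so its numbers are not directly comparable with the output of this sketch, and the metrics used in the usage lines are made up.

```javascript
// Classic maintainability index:
//   MI = 171 - 5.2 * ln(V) - 0.23 * G - 16.2 * ln(LOC)
// V: Halstead volume, G: cyclomatic complexity, LOC: lines of code.
function maintainabilityIndex(halsteadVolume, cyclomaticComplexity, linesOfCode) {
    return 171 - 5.2 * Math.log(halsteadVolume) - 0.23 * cyclomaticComplexity -
        16.2 * Math.log(linesOfCode);
}

// Some tools rescale the result to a 0..100 range.
function normalizedIndex(mi) {
    return Math.max(0, (mi * 100) / 171);
}

// A small, simple function versus a large, branchy one (made-up metrics).
console.log(normalizedIndex(maintainabilityIndex(50, 2, 5)).toFixed(1));
console.log(normalizedIndex(maintainabilityIndex(5000, 25, 200)).toFixed(1));
```

Larger volume, more branches and more lines all lower the score, which is why the metric rewards small, simple functions.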

GitHub

Do I have to introduce GitHub? Based on Git, this free version control platform offers more than just a code repository: one can also manage backlog items and issues.

Lessons learnt

The code base I have been working on had existed for two years and there was no real functional update to the library in 2015 (mostly improvements). As a matter of fact, it was more of a quality year: the library has benefited from automation and testing, and its complexity is now manageable.

I start this year knowing that I can now focus on features.

There would be much to say but this article is already long and if I take too much time to publish it, my new year wishes would be obsolete...

So here are the three most important lessons.

Testing *is* part of the development

Besides the obvious advantages of TDD, here are the main three:

- If the code is fully tested, there is no fear of breaking it. To improve the code quality, I had to rewrite huge portions of the code; I was safe because of the tests.

- When you develop an API, you often ask yourself about the best way to expose a feature. If you follow the TDD method, you will quickly see the power of experimenting with the code even before it is written: it gives you a taste of how it would work.

- Actually, you may notice that I didn't speak about documentation. I believe that code written the right way is self-documenting. Code never lies; comments sometimes do. Test cases are a good source of documentation.

Code quality

I definitely recommend reading Clean Code and The Clean Coder by Robert C. Martin (Uncle Bob).

That was a real eye opener to me.

Time management

This is a personal project meaning that I have very little time to spend on it. I usually work from home when the rest of the house is sleeping but I also take all the little opportunities I can find to think about improvements and take notes.

I cannot stress enough the importance of note taking: if it is not written, it does not exist.

Working on GitHub is also a time saver: since it is available from the web, I sometimes change a line directly in the source when I think of an improvement.

What's next?

Where we're going... we don't need roads!

I haven't completed the project refactoring yet: my first objective is to restore a feature that is used on my blog. Indeed, I am a lazy developer and I don't want to write HTML. That's why I write in markdown, which is converted by the library to render the articles (see blog.js... I may need to apply ESLint on it too).

Then, I will continue to play with streams: I would like to develop my own XML SAX parser and XML writer. And I will finish the XML serialization I started a long time ago.

I have trillions of ideas... so stay tuned to the project!

I just received my WebStorm Open Source License.
